
VPC Service Controls provides a way to limit access to GCP Services within your Organization

TL;DR: Together we’ll explore VPC Service Controls through a common use case for VPC Service Controls perimeters, take a deep dive into some key concepts, and learn how to automate administration with HashiCorp Terraform.

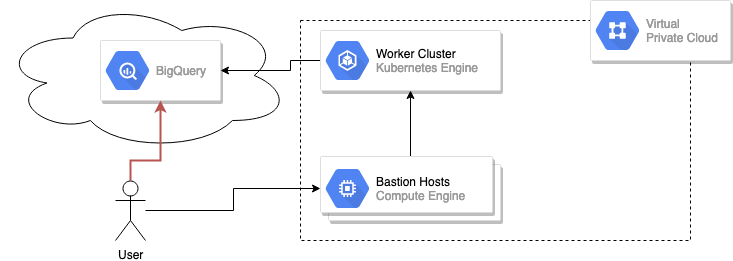

BigQuery Example

Let’s start with an example. Say we have the following architecture: a VPC that users reach through one or more bastion hosts, plus a GKE cluster that needs to connect to BigQuery for a data analytics workload.

Without VPC Service Controls, the user can always reach BigQuery, since its API is exposed on the public internet. The only thing keeping an unauthorized user out is IAM permissions. Let’s say one of the analysts accidentally commits a service account credential with BigQuery access to GitHub. This is not a contrived example; it happens all the time. Once those credentials are out in the open, anyone who has them can access BigQuery from anywhere in the world.

One might think there should be a better way, since Google data centers house both your VPC and the BigQuery service. There is: VPC Service Controls.

VPC Service Controls

Typically, when you make an API request against a GCP resource such as a Cloud Storage bucket, Pub/Sub topic or BigQuery dataset, that request is resolved to an external endpoint protected by IAM policies. This means that, if IAM policies are configured to allow it, other projects and even other organizations can access those endpoints, since they are in fact publicly facing. VPC Service Controls lets you define a trusted perimeter around projects at the organization level so that only projects within that perimeter can communicate with your GCP resources. This is accomplished at the network layer of VPCs owned by your project, hence the name.

From a security perspective, this is incredibly beneficial because it drastically mitigates several risks including:

- Access from unauthorized networks using stolen credentials

- Data exfiltration by malicious insiders or compromised code

- Public exposure of private data caused by misconfigured Cloud IAM policies

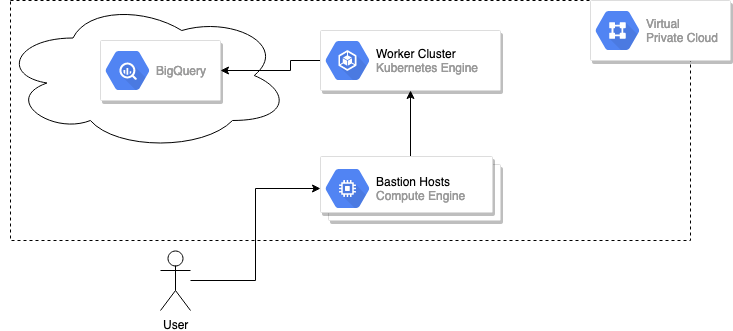

Back to our BigQuery example: if we implemented VPC Service Controls with a perimeter (more on this term later) around our project and BigQuery, it would look something like this.

BigQuery Example with VPC Service Control

Notice how the user who wants to access BigQuery now has to enter through the bastion or some other approved path? This effectively cuts BigQuery off from the internet by allowing access only from the projects you whitelist. BigQuery is just one example, and not every service is supported. As of this writing, the following are supported:

- Cloud Dataflow

- Cloud KMS

- Cloud Spanner

- Google Kubernetes Engine API

- Google Kubernetes Engine private clusters

- Cloud Bigtable

- Cloud Storage

- BigQuery

- Cloud Pub/Sub

- Cloud Dataproc

- Stackdriver Logging

- Container Registry

Perimeters

Perimeters are how VPC Service Controls reasons about the relationship between projects, services and policies. When you create your first perimeter, you’ll be asked to select which projects you want in the perimeter and which services the perimeter should protect. Everything inside the perimeter is considered trusted: all projects inside one perimeter can access the resources of the selected services, bound only by IAM. To put it another way, projects in the perimeter are not blocked from protected services at the network level. When creating this perimeter, you may notice two other options, Perimeter Type and Policy; we’ll talk about the latter first.
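A minimal Terraform sketch of such a perimeter, assuming the numeric ID of an existing access policy and the member project numbers are supplied as variables. The variable names and the perimeter name `analytics_perimeter` are hypothetical. `resources` holds the projects inside the perimeter and `restricted_services` holds the services it protects, here BigQuery and Cloud Storage.

```hcl
# Hypothetical inputs: the numeric access policy ID and the project numbers to protect.
variable "access_policy_id" {
  type = string
}

variable "perimeter_project_numbers" {
  type = list(string)
}

resource "google_access_context_manager_service_perimeter" "analytics" {
  provider = google-beta

  parent = "accessPolicies/${var.access_policy_id}"
  name   = "accessPolicies/${var.access_policy_id}/servicePerimeters/analytics_perimeter"
  title  = "analytics_perimeter"

  # A regular perimeter (the default); the other type is PERIMETER_TYPE_BRIDGE.
  perimeter_type = "PERIMETER_TYPE_REGULAR"

  status {
    # Projects inside the perimeter, referenced by project number.
    resources = [for n in var.perimeter_project_numbers : "projects/${n}"]

    # APIs that callers outside the perimeter are blocked from.
    restricted_services = [
      "bigquery.googleapis.com",
      "storage.googleapis.com",
    ]
  }
}
```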

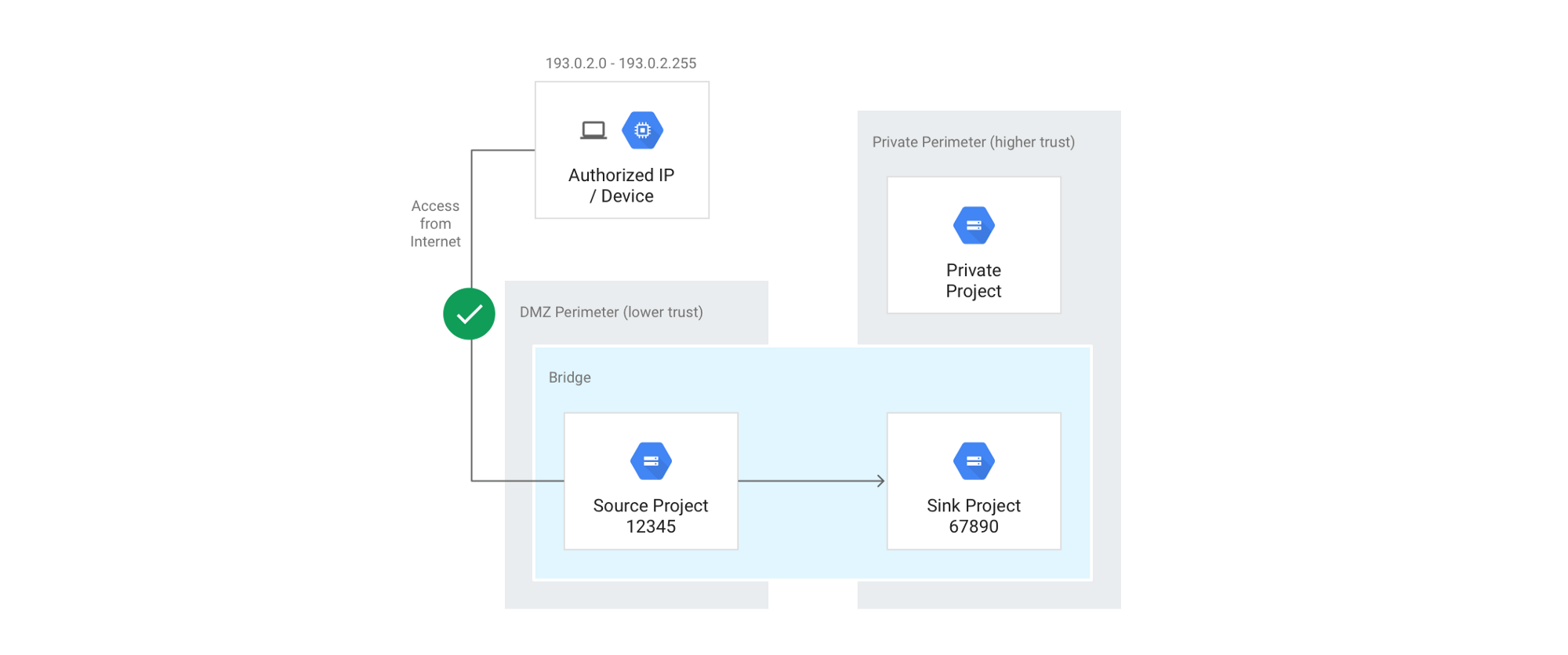

Access Context Manager

Access Context Manager (ACM) allows you to define fine-grained, attribute-based access control for projects and resources in GCP at the organization level. There are two terms to be aware of here: access policy and access level. An access policy is the organization-level container that holds your access levels and service perimeters. An access level is a named set of conditions on request attributes such as IP address, device type and user identity. For example, we could allow access to a project only from a particular CIDR range corresponding to your office network. VPC Service Controls are tightly bound to ACM: just as you can whitelist projects that are within your perimeter, you can also attach access levels to it. Attaching an access level effectively widens the perimeter to include requests that match it, even when they don’t come from the projects specified in the perimeter. For example, to allow a service account attached to a Cloud Function to talk to a project protected by a VPC Service Controls perimeter, you could add an access level whose user identity condition whitelists that service account.
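A sketch of the office-network and service-account access level described above, assuming an existing access policy whose numeric ID is passed in as a variable. The CIDR (a documentation range), the service account email and the level name `office_network` are placeholders. With `combining_function = "OR"`, a request matching either condition satisfies the level; the default is `AND`.

```hcl
# Hypothetical input: the numeric access policy ID.
variable "access_policy_id" {
  type = string
}

resource "google_access_context_manager_access_level" "office_network" {
  provider = google-beta

  parent = "accessPolicies/${var.access_policy_id}"
  name   = "accessPolicies/${var.access_policy_id}/accessLevels/office_network"
  title  = "office_network"

  basic {
    # Grant the level if ANY condition matches.
    combining_function = "OR"

    conditions {
      # Placeholder office CIDR.
      ip_subnetworks = ["203.0.113.0/24"]
    }

    conditions {
      # Placeholder Cloud Function service account allowed in from outside the perimeter.
      members = ["serviceAccount:my-function@my-project.iam.gserviceaccount.com"]
    }
  }
}

# Add this name to a perimeter's status.access_levels list to widen that perimeter.
output "office_network_access_level" {
  value = google_access_context_manager_access_level.office_network.name
}
```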

Because Access Context Manager policies are context- and identity-aware, they provide a way to build towards Google’s BeyondCorp model of zero-trust networking. They can also be an added layer of security on top of Identity-Aware Proxy (IAP).

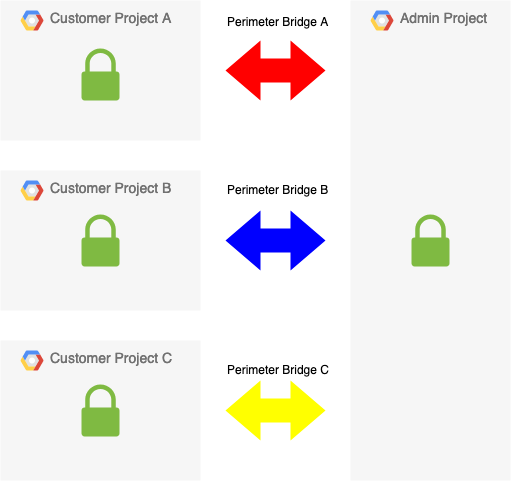

Perimeter Bridge

Back in the perimeter configuration, you may notice the option to create a perimeter bridge as the perimeter type. A bridge is similar to an ACM access level in that it extends the trust boundary, but it extends it to include other perimeters. You can bridge just a subset of projects between two perimeters; those projects then have access to each other’s resources, again limited only by IAM.
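A sketch of a bridge between two projects, assuming each project already sits in its own regular perimeter and that the policy ID and project numbers are passed in as variables (all names here are hypothetical). A bridge perimeter lists only projects; it does not take restricted services or access levels.

```hcl
# Hypothetical inputs.
variable "access_policy_id" {
  type = string
}

variable "project_a_number" {
  type = string
}

variable "project_b_number" {
  type = string
}

resource "google_access_context_manager_service_perimeter" "a_to_b_bridge" {
  provider = google-beta

  parent         = "accessPolicies/${var.access_policy_id}"
  name           = "accessPolicies/${var.access_policy_id}/servicePerimeters/a_to_b_bridge"
  title          = "a_to_b_bridge"
  perimeter_type = "PERIMETER_TYPE_BRIDGE"

  status {
    # Only the bridged projects; each belongs to a different regular perimeter.
    resources = [
      "projects/${var.project_a_number}",
      "projects/${var.project_b_number}",
    ]
  }
}
```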

Source: https://cloud.google.com/vpc-service-controls/docs/share-across-perimeters

Perimeter bridging allows you to do some very interesting things with project isolation. Say you need to keep projects separated from each other for regulatory reasons. For example, you may have a multi-tenant SaaS application where each project represents one customer and customer data must never mix. You would still, however, need at least one project as a single source for logging, monitoring, administration, etc.

In this solution, we can use perimeter bridges to form a hub-and-spoke pattern: customer projects have no access to each other’s resources, yet the Admin project can talk to all of them for things like running Terraform, collecting logs, etc.
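The hub-and-spoke layout could be sketched as one bridge per customer project, each pairing that customer with the Admin project. Because bridges are not transitive, two customer projects never share a bridge and stay isolated from each other. This assumes every project already belongs to its own regular perimeter. The variable names are hypothetical, and the map keys must be valid perimeter-name characters (letters, digits and underscores).

```hcl
# Hypothetical inputs.
variable "access_policy_id" {
  type = string
}

variable "admin_project_number" {
  type = string
}

variable "customer_project_numbers" {
  type = map(string) # customer key => project number
}

# One bridge per customer: each joins that customer's project to the Admin (hub)
# project only, so no bridge ever contains two customer projects.
resource "google_access_context_manager_service_perimeter" "admin_bridge" {
  provider = google-beta
  for_each = var.customer_project_numbers

  parent         = "accessPolicies/${var.access_policy_id}"
  name           = "accessPolicies/${var.access_policy_id}/servicePerimeters/admin_bridge_${each.key}"
  title          = "admin_bridge_${each.key}"
  perimeter_type = "PERIMETER_TYPE_BRIDGE"

  status {
    resources = [
      "projects/${var.admin_project_number}",
      "projects/${each.value}",
    ]
  }
}
```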

Automation

Whenever you make security-related changes to your cloud infrastructure, it’s critically important to make a best effort at repeatability and automation in the form of Infrastructure as Code (IaC). This not only lets you audit changes over time but also gives you control over the entire change management process. It also enables you to run security tests against infrastructure code changes as you would against any other piece of software. The industry-standard tool for IaC is HashiCorp Terraform.

When using Terraform for automation of these service controls, you can make use of the following resources:

As an example:
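A minimal sketch of the provider setup and the organization-level access policy, assuming Terraform 0.13 or later and a numeric organization ID passed in as a variable (`org_id` and `org_policy` are hypothetical names). The policy's numeric `name` is what perimeters and access levels reference as `accessPolicies/<id>`. An organization has only one organization-level access policy, so if one already exists you would import it rather than create a new one.

```hcl
terraform {
  required_providers {
    google-beta = {
      source = "hashicorp/google-beta"
    }
  }
}

# Credentials come from the environment, e.g. Application Default Credentials.
provider "google-beta" {}

# Hypothetical input: your numeric organization ID.
variable "org_id" {
  type = string
}

# Organization-level container for access levels and service perimeters.
resource "google_access_context_manager_access_policy" "org_policy" {
  provider = google-beta

  parent = "organizations/${var.org_id}"
  title  = "org_policy"
}

# Numeric policy ID, used as "accessPolicies/<id>" by perimeters and access levels.
output "access_policy_id" {
  value = google_access_context_manager_access_policy.org_policy.name
}
```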

Note that because the google-beta provider is necessary for these resources, the provider must be specified in each resource declaration with `provider = google-beta`.

Restricted APIs DNS

You can go a step further with VPC Service Controls by using Private Google Access to ensure that API requests from your VPC never reach Google APIs over the public internet. This uses what is called the restricted VIP (virtual IP) range, 199.36.153.4/30. The domain restricted.googleapis.com resolves to this range, which is only routable from within Google Cloud. You can read more about how to set up DNS for Private Google Access in the docs. The TL;DR is that if you want your API requests to be routed internally, you can configure your DNS server to resolve *.googleapis.com as a CNAME to restricted.googleapis.com.
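The DNS setup could be sketched with Cloud DNS in Terraform. This assumes the VPC's network ID (projects/PROJECT/global/networks/NETWORK) is passed in as a variable; the zone, record and route names are hypothetical. A private zone for googleapis.com maps restricted.googleapis.com to the restricted VIP range and CNAMEs every other *.googleapis.com name to it. These DNS and route resources use the regular google provider.

```hcl
# Hypothetical input: the VPC that should use the restricted VIP.
variable "network_id" {
  type = string
}

# Private zone visible only to this VPC; it overrides public resolution of googleapis.com.
resource "google_dns_managed_zone" "googleapis" {
  name       = "googleapis-restricted"
  dns_name   = "googleapis.com."
  visibility = "private"

  private_visibility_config {
    networks {
      network_url = var.network_id
    }
  }
}

# restricted.googleapis.com -> the restricted VIP range 199.36.153.4/30.
resource "google_dns_record_set" "restricted" {
  managed_zone = google_dns_managed_zone.googleapis.name
  name         = "restricted.googleapis.com."
  type         = "A"
  ttl          = 300
  rrdatas      = ["199.36.153.4", "199.36.153.5", "199.36.153.6", "199.36.153.7"]
}

# Every other *.googleapis.com name is a CNAME to restricted.googleapis.com.
resource "google_dns_record_set" "wildcard" {
  managed_zone = google_dns_managed_zone.googleapis.name
  name         = "*.googleapis.com."
  type         = "CNAME"
  ttl          = 300
  rrdatas      = ["restricted.googleapis.com."]
}

# Explicit route to the VIP range via the default internet gateway, useful if the
# VPC's default 0.0.0.0/0 route has been removed.
resource "google_compute_route" "restricted_vip" {
  name             = "restricted-googleapis"
  network          = var.network_id
  dest_range       = "199.36.153.4/30"
  next_hop_gateway = "default-internet-gateway"
}
```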

Conclusion

We’ve taken a high-level look at VPC Service Controls and what they can provide. We walked through a BigQuery example and then dove into a discussion of perimeters, access policies and access levels. We then looked at how Terraform can help automate this whole process. Stay tuned for more on Cloud Security from ScaleSec.