Data Loss Prevention Services in Google Cloud

As data continues to be arguably the most valuable currency of our time, protecting sensitive data remains at the forefront of organizations’ security concerns. Data Loss Prevention (DLP) refers to the suite of tools and strategies created to address those concerns head-on. The goal of DLP is to monitor for sensitive data types and prevent them from crossing defined security boundaries, both in transit and at rest. With the emergence of Cloud Service Providers (CSPs) such as Amazon Web Services (AWS) and Google Cloud Platform (GCP), DLP strategies have evolved to use a mixture of cloud-native first-party and third-party services. This article covers first-party DLP strategies in GCP.

Cloud Data Loss Prevention

The first service that comes to mind when one thinks of first-party DLP offerings in GCP is Cloud Data Loss Prevention. This service can scan for matches using custom definitions or built-in detectors for data types (infoTypes), including names, email addresses, telephone numbers, and credit card numbers. Two main types of scanning jobs are available: inspection to find sensitive data, and de-identification to remove sensitive data.
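As a minimal sketch of how built-in and custom detectors might be combined, the following builds a JSON body shaped like a Cloud DLP content inspection request. The helper name `build_inspect_request`, the sample text, and the `EMPLOYEE_ID` pattern are hypothetical; the body is only printed here, and sending it would require an authenticated call that is out of scope for this sketch.

```python
import json


def build_inspect_request(text, info_types, custom_regexes=None):
    """Return a JSON-serializable body for a content inspection request.

    `build_inspect_request` is a hypothetical helper, not part of any SDK.
    """
    inspect_config = {
        # Built-in detectors are referenced by infoType name.
        "infoTypes": [{"name": name} for name in info_types],
        "minLikelihood": "POSSIBLE",
        "includeQuote": True,
    }
    if custom_regexes:
        # Custom definitions: one regex-based custom infoType per entry.
        inspect_config["customInfoTypes"] = [
            {"infoType": {"name": name}, "regex": {"pattern": pattern}}
            for name, pattern in custom_regexes.items()
        ]
    return {"item": {"value": text}, "inspectConfig": inspect_config}


request_body = build_inspect_request(
    "Reach me at jane@example.com or 555-0100.",
    ["EMAIL_ADDRESS", "PHONE_NUMBER"],
    custom_regexes={"EMPLOYEE_ID": r"EMP-\d{6}"},  # assumed in-house ID format
)
print(json.dumps(request_body, indent=2))
```

Keeping the request as plain data makes it easy to review which detectors are enabled before anything is scanned.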

Inspection jobs are designed to find where sensitive data lives within the environment, as data often ends up in unforeseen locations. Once data is discovered, multiple actions can be taken, including sending results to BigQuery for further analysis or to Pub/Sub for streaming to another service. Google Cloud also offers an interactive way to run sample discovery queries and view sample results. Inspection is helpful for determining whether data lives in environments with the proper security controls, but architecting and enforcing those controls is a separate process.
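To illustrate how an inspection job and its follow-up actions fit together, here is a sketch of a job definition that scans a Cloud Storage location, saves findings to a BigQuery table, and publishes to a Pub/Sub topic. The helper `build_inspect_job` and all bucket, project, dataset, and topic names are placeholders chosen for this example.

```python
import json


def build_inspect_job(bucket_url, info_types, findings_table, pubsub_topic):
    """Return a JSON-serializable inspection job definition.

    Hypothetical helper; every resource name passed in is a placeholder.
    """
    return {
        "inspectJob": {
            # Where to look for sensitive data.
            "storageConfig": {
                "cloudStorageOptions": {"fileSet": {"url": bucket_url}}
            },
            # What to look for.
            "inspectConfig": {
                "infoTypes": [{"name": name} for name in info_types]
            },
            # What to do once the scan has run.
            "actions": [
                # Write findings to BigQuery for further analysis.
                {"saveFindings": {"outputConfig": {"table": findings_table}}},
                # Publish a message to Pub/Sub so another service can react.
                {"pubSub": {"topic": pubsub_topic}},
            ],
        }
    }


job = build_inspect_job(
    bucket_url="gs://example-landing-bucket/**",
    info_types=["CREDIT_CARD_NUMBER", "EMAIL_ADDRESS"],
    findings_table={
        "projectId": "example-project",
        "datasetId": "dlp_findings",
        "tableId": "landing_bucket_scan",
    },
    pubsub_topic="projects/example-project/topics/dlp-scan-complete",
)
print(json.dumps(job, indent=2))
```

Note that the Pub/Sub action is a notification about the job rather than a copy of the findings, so a downstream consumer would typically read the detailed results from the BigQuery table.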

De-identification jobs offer security and compliance benefits by transforming data using several methods. For example, pseudonymization (sometimes referred to as tokenization) is a de-identification technique in which sensitive data values are replaced with cryptographically generated tokens. This process can be one-way (hashing), using an irreversible cryptographic transformation, or two-way, using a reversible cryptographic process. Redaction is another de-identification option, in which sensitive data is replaced with a custom string once detected. Redaction is also a one-way process that makes the original data unrecoverable, though unlike pseudonymization it is not cryptographic in nature.
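The difference between one-way pseudonymization and redaction can be shown with a small standard-library sketch. This is a conceptual illustration only, not Cloud DLP's implementation: the email regex stands in for a real detector, the key is a demo value, and the function names are made up for this example. Two-way tokenization is omitted because it requires a reversible cipher and managed keys.

```python
import hashlib
import hmac
import re

# Simplified stand-in for an email detector (assumption, not DLP's logic).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(text, key):
    """Replace each email with a keyed one-way token (HMAC-SHA256).

    The same input and key always yield the same token, so joins and
    counts still work, but the original value cannot be recovered.
    """
    def to_token(match):
        digest = hmac.new(key, match.group(0).encode("utf-8"), hashlib.sha256)
        return "TOKEN(" + digest.hexdigest()[:16] + ")"

    return EMAIL_PATTERN.sub(to_token, text)


def redact(text, replacement="[REDACTED]"):
    """Replace each email with a fixed custom string (non-cryptographic)."""
    return EMAIL_PATTERN.sub(lambda _match: replacement, text)


sample = "Contact alice@example.com now"
demo_key = b"demo-key-do-not-use-in-production"
print(pseudonymize(sample, demo_key))
print(redact(sample))
```

Pseudonymized values remain distinguishable from one another, while redacted values all collapse to the same string, which is the main practical trade-off between the two methods.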

A deeper dive into the Cloud DLP service and some common use cases was published by ScaleSec last year. While the service offers many benefits, it also leaves some gaps from a DLP program perspective: How can organizations prevent data from leaving the organization and being subjected to cryptographic attacks? How can data that has not yet been de-identified by a DLP process stay within defined security boundaries? How can controls be placed around sensitive data with unique or random patterns? In other words, while the Cloud DLP service has many benefits, it does not handle the exfiltration portion of a comprehensive DLP strategy. That niche is best addressed by VPC Service Controls.

Data Loss Prevention on Google Cloud

VPC Service Controls

The VPC Service Controls offering in GCP was created to mitigate data exfiltration risks and keep data private inside defined boundaries. At a high level, it involves creating a service perimeter around defined and supported Google resources, namely projects and services. By default, communication within the perimeter is allowed, while communication to or from the perimeter is denied for restricted resources. This accomplishes multiple objectives. First, data cannot be exfiltrated by copying it to services outside the perimeter, such as a public Google Cloud Storage (GCS) bucket or an external BigQuery table. Second, access from unauthorized external networks is not allowed, even if the caller holds valid IAM permissions or is using compromised credentials. Ingress and egress rules can be created to allow certain external services or users to access resources within the perimeter, so that the environment stays tightly controlled but still functional. Keep an eye on the ScaleSec blog for a deep dive into VPC Service Controls.
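As a rough sketch of what a service perimeter definition contains, the following builds a perimeter resource that lists the protected projects and the restricted services. The helper `build_service_perimeter`, the policy ID, the perimeter name, and the project numbers are all placeholders; ingress and egress rules are left out to keep the example small.

```python
import json


def build_service_perimeter(policy_id, name, project_numbers, restricted_services):
    """Return a JSON-serializable service perimeter definition.

    Hypothetical helper; IDs and project numbers are placeholders.
    """
    return {
        "name": f"accessPolicies/{policy_id}/servicePerimeters/{name}",
        "title": name,
        "perimeterType": "PERIMETER_TYPE_REGULAR",
        "status": {
            # Projects inside the perimeter, referenced by project number.
            "resources": [f"projects/{number}" for number in project_numbers],
            # APIs whose data may not cross the perimeter by default.
            "restrictedServices": list(restricted_services),
        },
    }


perimeter = build_service_perimeter(
    policy_id="1234567890",
    name="data_pipeline_perimeter",
    project_numbers=[111111111111, 222222222222],
    restricted_services=["storage.googleapis.com", "bigquery.googleapis.com"],
)
print(json.dumps(perimeter, indent=2))
```

With a definition like this, a copy from a bucket in one of the listed projects to a bucket outside the perimeter would be blocked unless an egress rule explicitly allowed it.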

Putting it All Together

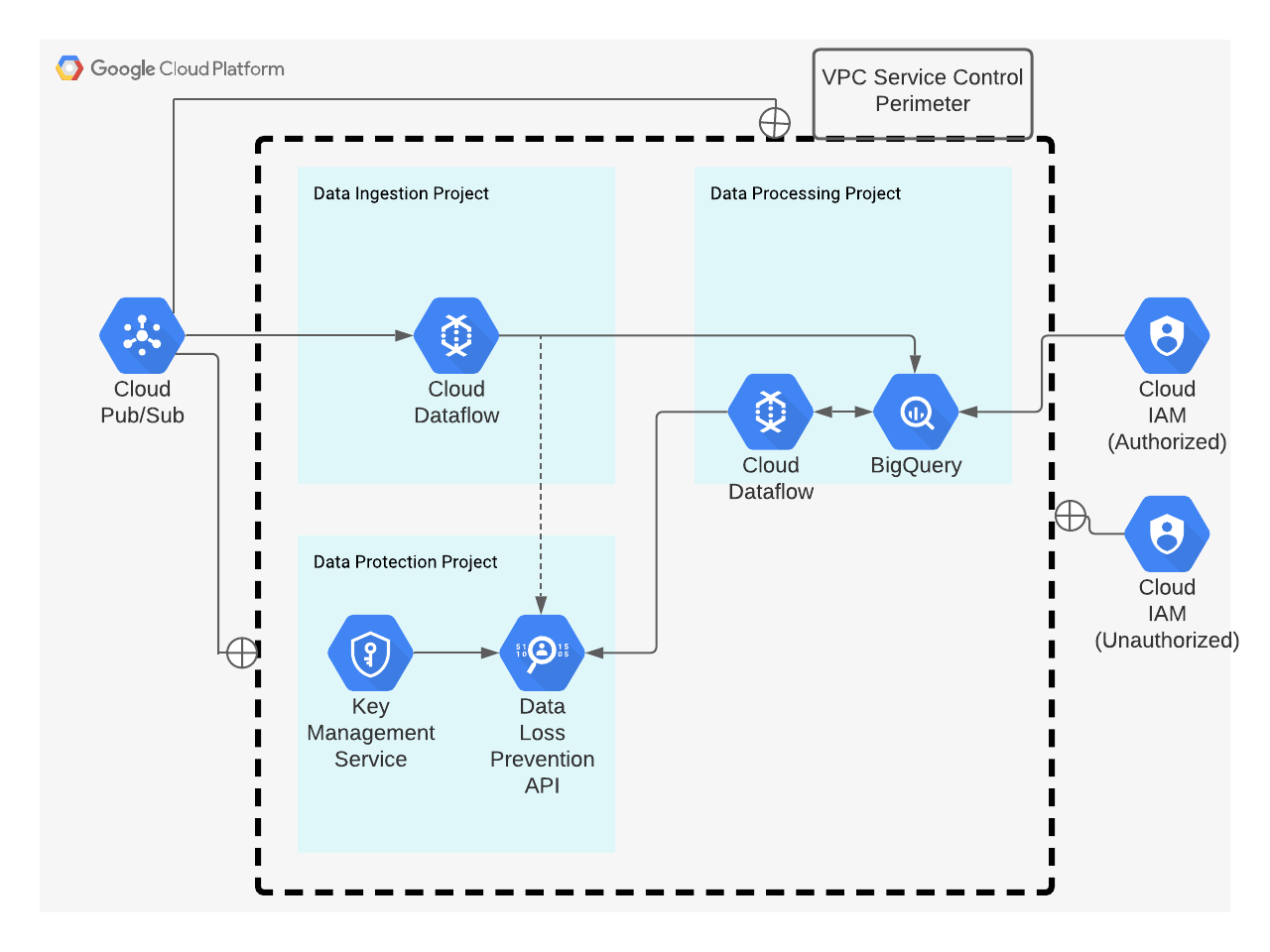

Consider the following example of a data processing pipeline:

Example of a data processing pipeline

Three projects are created for data processing: one for data ingestion, one for data protection involving Cloud DLP, and one for the processing workload. A VPC Service Controls perimeter is created that encompasses all three projects, allowing free communication between them but no default communication across the perimeter boundary. VPC Service Controls access levels are created to allow a Pub/Sub topic to transfer sensitive data across the perimeter, and only to the data ingestion project. A Dataflow job reads each Pub/Sub message and then calls Cloud DLP for two-way de-identification, cryptographically tokenizing sensitive data based on the applicable infoTypes. Dataflow then writes the de-identified data to BigQuery in the data processing project for analysis. Another VPC Service Controls access level is granted to specific users so that they can only view BigQuery (which contains de-identified data only) in the data processing project. If a use case arises where re-identification of data is needed, Dataflow can send the required data to Cloud DLP in the data protection project to be re-identified.
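The two-way de-identification step in this pipeline could look roughly like the request body below, which tokenizes detected values with a deterministic, reversible transformation backed by a KMS-wrapped key. This is a sketch under assumptions: the helper `build_deidentify_request`, the key resource name, the wrapped key value, and the surrogate name `TOKENIZED` are placeholders, and the article does not specify which reversible transformation the pipeline actually uses.

```python
import json


def build_deidentify_request(text, info_types, kms_key_name, wrapped_key, surrogate):
    """Return a JSON-serializable body for a reversible de-identification call.

    Hypothetical helper; key names and values are placeholders.
    """
    targets = [{"name": name} for name in info_types]
    transformation = {
        "infoTypes": targets,
        "primitiveTransformation": {
            # Deterministic encryption: reversible by anyone allowed to use the key.
            "cryptoDeterministicConfig": {
                "cryptoKey": {
                    "kmsWrapped": {
                        "wrappedKey": wrapped_key,
                        "cryptoKeyName": kms_key_name,
                    }
                },
                # Label attached to tokens so they can be found for re-identification.
                "surrogateInfoType": {"name": surrogate},
            }
        },
    }
    return {
        "item": {"value": text},
        "inspectConfig": {"infoTypes": targets},
        "deidentifyConfig": {
            "infoTypeTransformations": {"transformations": [transformation]}
        },
    }


body = build_deidentify_request(
    text="Card 4111-1111-1111-1111 belongs to jane@example.com",
    info_types=["CREDIT_CARD_NUMBER", "EMAIL_ADDRESS"],
    kms_key_name="projects/example-protection/locations/global/keyRings/dlp/cryptoKeys/tokenizer",
    wrapped_key="BASE64_WRAPPED_KEY_PLACEHOLDER",
    surrogate="TOKENIZED",
)
print(json.dumps(body, indent=2))
```

Keeping the key in the data protection project means that only identities with access to that project and key can reverse the tokens, which matches the separation of duties described above.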

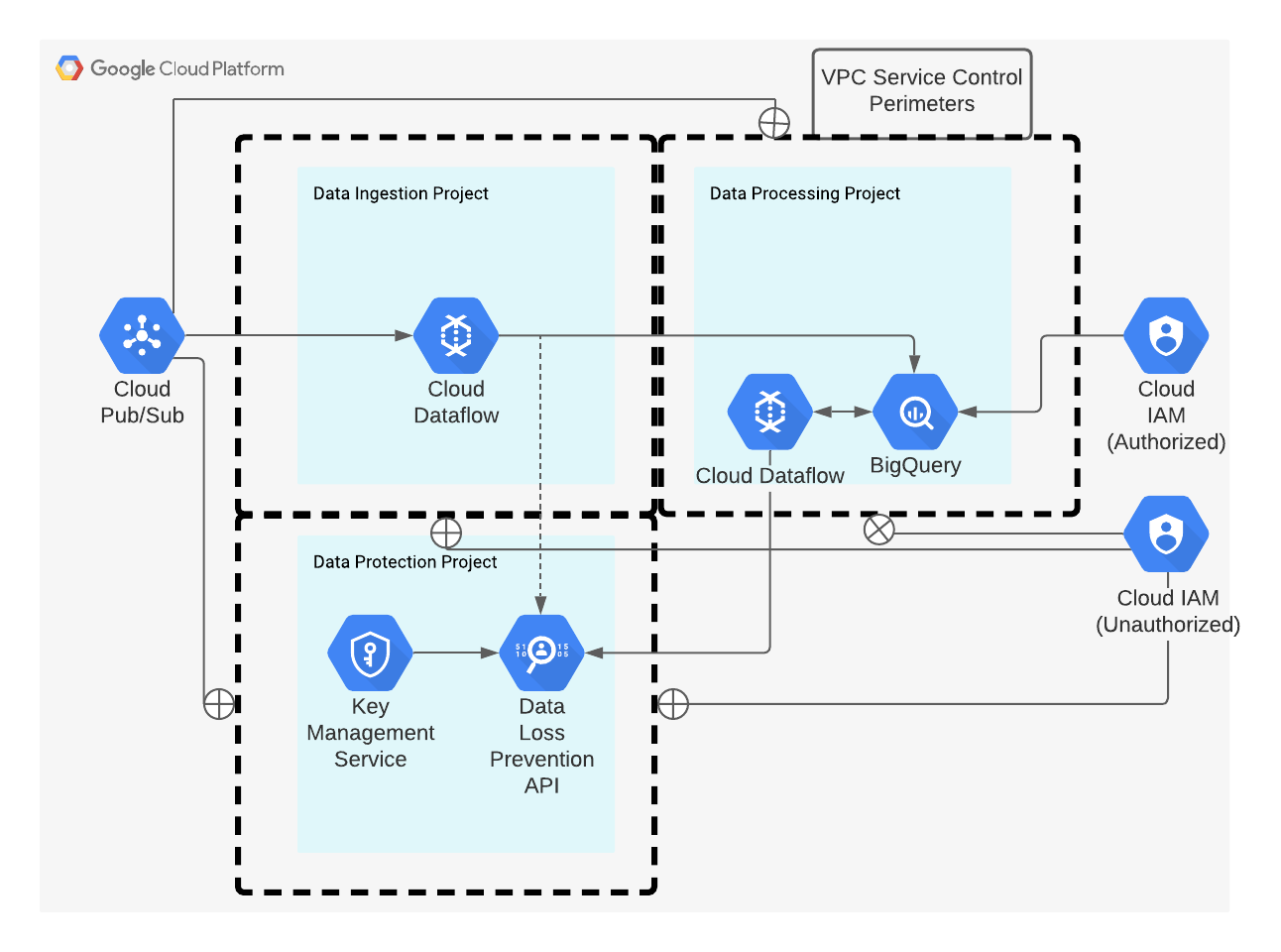

The scenario described above is just one example of many. Due to the flexibility of VPC Service Controls, other options such as creating a service perimeter around individual projects in a workflow are also possible. While this option may require more management overhead, it also has the benefit of greater control over what services are able to communicate across restricted projects.

Service perimeter created around individual projects

Conclusion

While solid basics such as right-sized identity and access management (IAM) policies, network security rules, and encryption in transit and at rest form the basis of a sound data protection strategy, additional layers are often needed for a comprehensive DLP solution. Cloud DLP is a bit of a misnomer in that it addresses the data discovery and data masking aspects of a DLP strategy but does not address the exfiltration piece. VPC Service Controls fill that gap. Used together, VPC Service Controls and Cloud DLP can address the multiple facets of a DLP strategy.