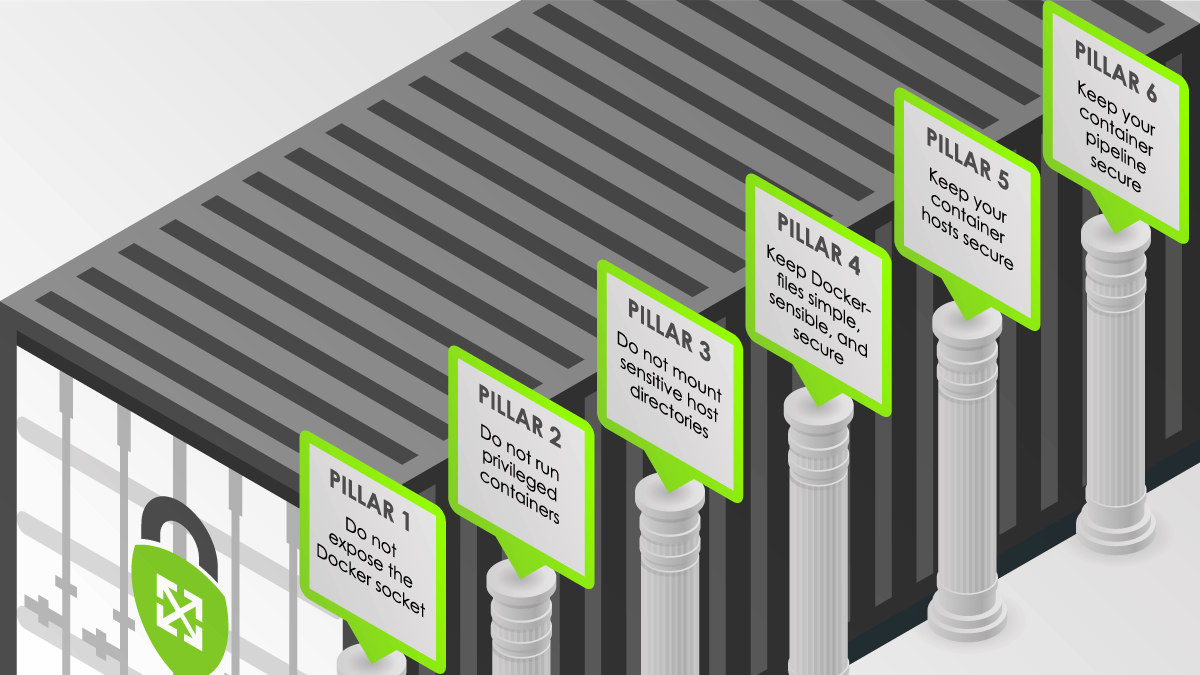

The Pillars of Secure Containers

Fueled by a constant desire to increase development velocity, cloud computing gained traction around 2007. This marked the first step in a fundamental shift in how applications are developed. The second step was the birth of containers, which were inspired by the flexibility of cloud computing and the thinking that came with it, yet went further by enabling platform- and cloud-agnostic workflows.

The idea of a Linux container, now commonly referred to simply as a “container”, was born around 2008 when Google’s cgroup patches were merged into the Linux kernel. LXC, the first container manager, was released shortly after. In basic terms, a container is an abstraction over the kernel that limits what a process can do (cgroups) and what it can see (namespaces). Containers received widespread attention when Docker released its tools around 2013. Docker quickly moved away from LXC and created its own container manager, which automated things like image management, volumes, networking overlays, and much more. Docker abstracted the hard stuff and made it possible to run containers at scale with a few commands.

Containers are not virtual machines. They do not run their own kernels; instead, they share the host’s kernel and resources. Because of their popularity and extensibility, containers run all aspects of a typical cloud environment: backend APIs, front-end web services, even databases. Containers also enable seamless hybrid and multi-cloud setups with many options for rapid lift-and-shift migrations. Because containers are used so widely, they need a solid security strategy applied at multiple layers of the application lifecycle, i.e., defense in depth. Containers provide a way to package an application as a single artifact and run it without worrying about the underlying host… or so people thought. This article explains how to apply defense in depth to your containers with six pillars of container security.

Pillar 1

Do not expose the Docker socket

The Docker unix socket is usually located at /var/run/docker.sock and is owned by root:docker. Exposing the socket can be done in a few ways:

- Mounting the socket into a container

- Changing socket ownership

- Adding users to the docker group

An exposed socket can be used by the Docker client or curl to run arbitrary Docker commands, including starting containers. In some cases you can mount arbitrary host volumes and escape a container sandbox.
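As a rough illustration of the first check an attacker (or an auditor) might make, the sketch below tests whether a path is a unix socket that the current user can write to, which is the permission needed to connect to it. The function name `docker_socket_exposed` is hypothetical, and the default path is the usual location mentioned above; adjust it if your host uses a different one.

```python
import os
import stat


def docker_socket_exposed(path="/var/run/docker.sock"):
    """Return True if `path` is a unix socket the current user can write to."""
    try:
        mode = os.stat(path).st_mode
    except OSError:
        # Missing path, or no permission to stat it: treat as not exposed.
        return False
    # Connecting to a unix socket requires write permission on the socket file.
    return stat.S_ISSOCK(mode) and os.access(path, os.W_OK)
```

Running `docker_socket_exposed()` inside a container and getting `True` suggests the socket was mounted in, and that any process in that container can likely drive the Docker daemon on the host.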

Pillar 2

Do not run privileged containers

A container is considered privileged if the UID inside the container is 0, if it was started with the --privileged flag, or if it was given additional capabilities.

A privileged container is a security risk.

A container is not a security boundary by itself. By running a privileged container, you remove the security boundaries that the container engine imposes on it (seccomp, LSM, host/container isolation) and therefore create a risk for other containers on the host, or the host itself. Containers with --privileged can modify the host, such as installing a kernel module. Capabilities (another kernel security feature) can create a security risk as well, but there are some edge cases where they are required. If so, make sure to isolate those containers to their own hosts.
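One way to see which capabilities a process actually holds is to decode the `CapEff` bitmask from `/proc/self/status`. The sketch below is a minimal example under stated assumptions: the bit numbers come from `linux/capability.h`, but the choice of which capabilities to call "risky" is illustrative, and the names `RISKY_CAPS`, `risky_capabilities`, and `current_cap_eff` are hypothetical.

```python
# Capability bit positions as defined in linux/capability.h.
# Which ones count as "risky" is an illustrative selection, not an official list.
RISKY_CAPS = {
    12: "CAP_NET_ADMIN",
    16: "CAP_SYS_MODULE",  # allows loading kernel modules
    17: "CAP_SYS_RAWIO",
    19: "CAP_SYS_PTRACE",
    21: "CAP_SYS_ADMIN",
}


def risky_capabilities(cap_eff_hex):
    """Return the names of risky capabilities set in a hex CapEff mask."""
    mask = int(cap_eff_hex, 16)
    return [name for bit, name in sorted(RISKY_CAPS.items()) if mask & (1 << bit)]


def current_cap_eff(status_path="/proc/self/status"):
    """Read the effective capability mask of the current process (Linux only)."""
    with open(status_path) as fh:
        for line in fh:
            if line.startswith("CapEff:"):
                return line.split()[1]
    return None
```

For example, `risky_capabilities("00000000a80425fb")`, a mask commonly seen in containers started with Docker's default capability set, returns an empty list, while a mask with every low bit set (as typically seen with --privileged) returns all five names.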

Finally, never run a container as root. In many cases root inside a container will not lead to a container escape on its own, but it still gives an attacker an opportunity to leverage root access to escape. As mentioned earlier, a layered approach to security is essential in stopping attacks.

For Docker, set the USER directive in the Dockerfile to a user created specifically for the container.

```dockerfile
# Set a home directory specifically for this container
ENV WORKDIR="/app"

# Create the user, our app directory, and set the owner
RUN useradd -s /bin/bash --no-create-home app && mkdir -p ${WORKDIR} && chown -R app:app ${WORKDIR}

# run as app user
USER app

# set our relative directory to /app
WORKDIR ${WORKDIR}
```

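On Kubernetes, you can set the MustRunAsNonRoot rule in a pod security policy to prevent pods from running as root. Note that PodSecurityPolicy was removed in Kubernetes 1.25; on newer clusters, set runAsNonRoot: true in the pod or container securityContext and enforce it with Pod Security Admission.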

Pillar 3

Do not mount sensitive host directories

Building on the second pillar, mounting sensitive host directories into a container can result in container escapes or data disclosure, especially if the container also runs as root. If a privileged container has /proc, /sys, or similar directories mounted, it can leverage them to escape the container or read data owned by other users, since the user inside the container is root.
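A simple pre-flight check can catch risky bind mounts before a container is started. The sketch below assumes volume specs in the `source:target[:options]` form used by `docker run -v`; the function name `sensitive_mounts` and the prefix list are hypothetical (the list covers /proc and /sys from the paragraph above plus the Docker socket from Pillar 1) and should be extended for your environment.

```python
import posixpath

# Host paths treated as sensitive. This list is an assumption; extend as needed.
SENSITIVE_PREFIXES = ("/proc", "/sys", "/var/run/docker.sock")


def sensitive_mounts(volume_specs, prefixes=SENSITIVE_PREFIXES):
    """Return the volume specs whose host source is at or under a sensitive path."""
    flagged = []
    for spec in volume_specs:
        source = spec.split(":", 1)[0]
        if not source.startswith("/"):
            continue  # named volume, not a host path
        source = posixpath.normpath(source)
        for prefix in prefixes:
            if source == prefix or source.startswith(prefix + "/"):
                flagged.append(spec)
                break
    return flagged
```

A deployment script could refuse to start a container whenever `sensitive_mounts(...)` returns a non-empty list.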

Pillar 4

Keep Dockerfiles simple, sensible, and secure

Container security starts in the Dockerfile. Some easy-to-implement security measures include:

- Ensure each container runs a single application or task

- Update packages as the first step (apk upgrade, yum update, etc.)

  - Do this first and in a single step; otherwise the build cache can leave package sources stale

- Use only trusted base images

  - Don’t build from or launch user-made Docker images. Use trusted sources and pin the tag to a known version

- Do not store credentials in images

  - No credentials should be baked into the container. Ingest secrets at runtime using an SDK (ideal) or an environment variable (less ideal)

- Install the minimum set of packages

  - Don’t add development or test packages to the production image

- Consider using “slim” or Alpine tags if available

  - Many images provide a slim or Alpine tag to minimize the packages installed. Keep in mind that Alpine images ship musl instead of glibc, so they tend to have issues with applications that require glibc, notably Python

  - Distroless is another option for even smaller attack surfaces

Here is an example Dockerfile based on the principles discussed above:

```dockerfile
FROM python:3.8-slim

# Update and install deps. Install packages the app needs and remove list cache to save space. Notice this is done in one layer
RUN apt-get update && apt-get upgrade -y && \
    apt-get install zip unzip -y && \
    rm -rf /var/lib/apt/lists/*

# Set a home directory specifically for this container
ENV WORKDIR="/app"

# Create the user, our app directory, and set the owner
RUN useradd -s /bin/bash --no-create-home app && mkdir -p ${WORKDIR} && chown -R app:app ${WORKDIR}

# run as app user
USER app

# set our relative directory to /app
WORKDIR ${WORKDIR}

# Copy app files
COPY --chown=app:app src/* ${WORKDIR}/

# Set an entrypoint and cmd
ENTRYPOINT [ "some entrypoint script" ]
CMD [ "command to start" ]
```
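Two of the principles above, pinning the base image and running as a non-root user, are easy to check automatically. The following is a minimal sketch of such a check, not a replacement for a real Dockerfile linter: the function name `lint_dockerfile` is hypothetical, and it deliberately ignores ARG substitution, multi-stage subtleties, and `FROM scratch`.

```python
def lint_dockerfile(text):
    """Return a list of findings for a Dockerfile given as a string."""
    findings = []
    last_user = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        arg = parts[1].strip() if len(parts) > 1 else ""
        if instruction == "FROM":
            # Skip flags such as --platform=... and take the image reference.
            tokens = [t for t in arg.split() if not t.startswith("--")]
            image = tokens[0] if tokens else ""
            # Look for a tag only in the last path component, so a registry
            # port such as localhost:5000/app is not mistaken for a tag.
            name = image.rsplit("/", 1)[-1]
            if "@" not in image and (":" not in name or name.endswith(":latest")):
                findings.append(f"base image not pinned: {image}")
        elif instruction == "USER":
            last_user = arg
    # The last USER instruction decides who the container runs as.
    if last_user is None or last_user.split(":")[0] in ("root", "0"):
        findings.append("container runs as root")
    return findings
```

Run against the example Dockerfile above, this returns an empty list; a Dockerfile that starts with `FROM ubuntu` and never sets USER would produce both findings.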

Pillar 5

Keep your container hosts secure

Many container escapes that don’t rely on privileged containers are the result of kernel exploits. Keep your hosts up to date with the latest patches for all system packages, including the kernel and the container engine. As mentioned before, don’t add users to the docker group unless they explicitly need to interact with Docker as their own user. Additionally, do not change the owner or permissions of the Docker socket.
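Auditing who is in the docker group can be scripted. The sketch below parses the contents of a group file in the standard `name:password:gid:members` format; the function name `group_members` is hypothetical. It only lists supplementary members, so a user whose primary group is docker would not appear.

```python
def group_members(group_file_text, group="docker"):
    """Return the supplementary members of `group` from /etc/group-style text."""
    for line in group_file_text.splitlines():
        fields = line.strip().split(":")
        if len(fields) == 4 and fields[0] == group:
            # The member list is comma-separated and may be empty.
            return [user for user in fields[3].split(",") if user]
    return []
```

On a host, this could be called with the contents of /etc/group, and the result compared against the list of users who are expected to have Docker access.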

Pillar 6

Keep your container pipeline secure

The last steps of a container lifecycle are the pipeline and deployment. Consider adding container vulnerability scanning to your pipelines. Many cloud container repositories, such as ECR or GCR, have built-in container vulnerability scanning. On the deployment end, consider signing images and requiring that running images be signed. Also consider the benefits and tradeoffs of a sandboxing solution such as gVisor.
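A scan is most useful when the pipeline acts on its result. The sketch below shows one possible gate, assuming the scanner output has already been normalized into a list of dictionaries with a `severity` key. That format, the severity labels, and the name `blocking_findings` are all hypothetical and do not reflect the output of any specific scanner.

```python
# Severity labels and their ordering are assumptions for this sketch.
SEVERITY_RANK = {"LOW": 0, "MEDIUM": 1, "HIGH": 2, "CRITICAL": 3}


def blocking_findings(findings, threshold="HIGH"):
    """Return findings at or above `threshold`; unknown severities also block."""
    limit = SEVERITY_RANK[threshold]
    blocked = []
    for finding in findings:
        label = str(finding.get("severity", "")).upper()
        # Unknown labels fail closed: they are ranked at the threshold.
        rank = SEVERITY_RANK.get(label, limit)
        if rank >= limit:
            blocked.append(finding)
    return blocked
```

A pipeline step could exit with a non-zero status whenever `blocking_findings(report)` is non-empty, which stops the image from being pushed or deployed.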

What’s Next?

Integrating these six pillars into your container workflows will greatly decrease your attack surface. However, this is just the first step in your overall cloud security posture. Think about reducing access scopes for these containers with our blogs on IAM and least privilege. Ensure you have logging set up with insights into your containers and hosts. Manage secrets and passwords securely on GCP and AWS.