Refactoring by Example for Security Engineers

Introduction

As enterprises migrate more workloads into the cloud, security engineering teams can also benefit from adjusting their tools and workflows to take advantage of the lower cost of ownership and the scalability that the cloud brings. Moving legacy utilities to the cloud can be daunting at first; some engineers begin by reading the Twelve-Factor App methodology for microservice best practices, cloud migration strategies, and many other methods of security transformation.

This article helps security teams with legacy tooling replatform or refactor it, using a hands-on example of a threat detection engineering workflow that is Cloud Service Provider (CSP) agnostic.

Legacy Solution Workflow

A common practice for many security teams is to perform regular threat hunts. In those hunts, it’s commonplace to extract artifacts that can produce meaningful Indicators of Compromise (IOCs). One workflow that tends to intertwine with others during Digital Forensics and Incident Response (DFIR) is to perform static analysis of suspected malicious binaries.

The analysis usually results in IOCs such as unique strings and opcodes that can be exported to common formats such as YARA.

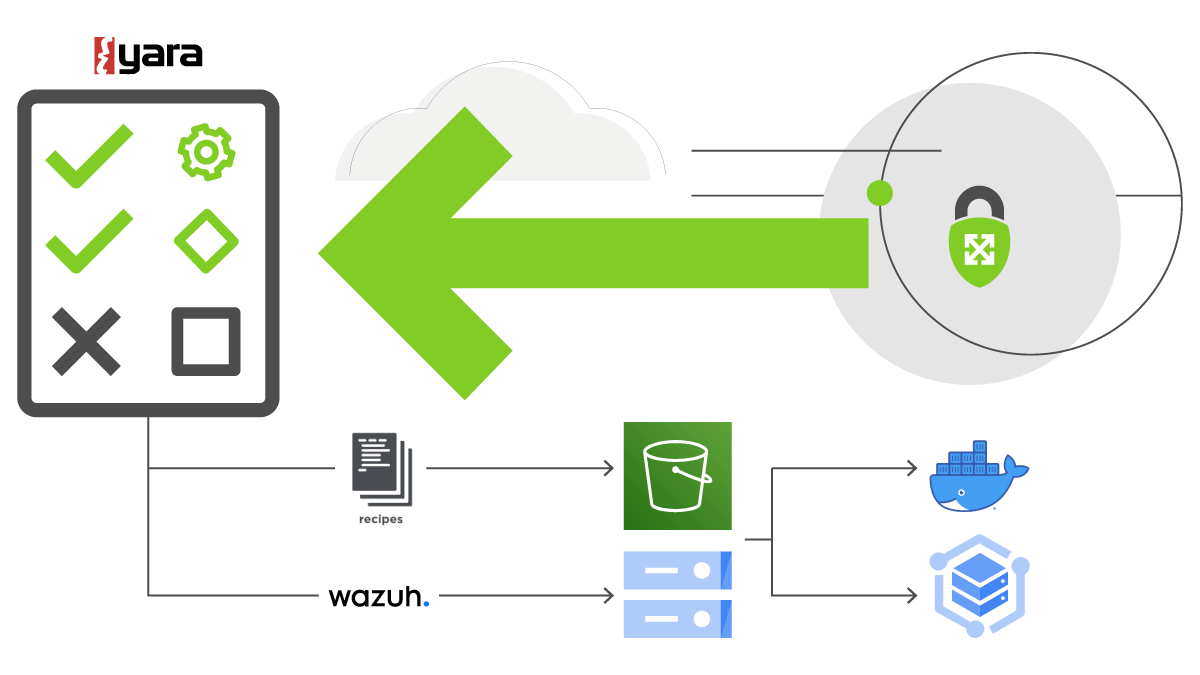

Malware binaries

The above diagram shows a basic, easily automated workflow in which security teams extract, and usually unpack, suspected malware binaries. Not all of these binaries are truly malicious; Potentially Unwanted Programs (PUPs), for example, sometimes trigger anomalous events.

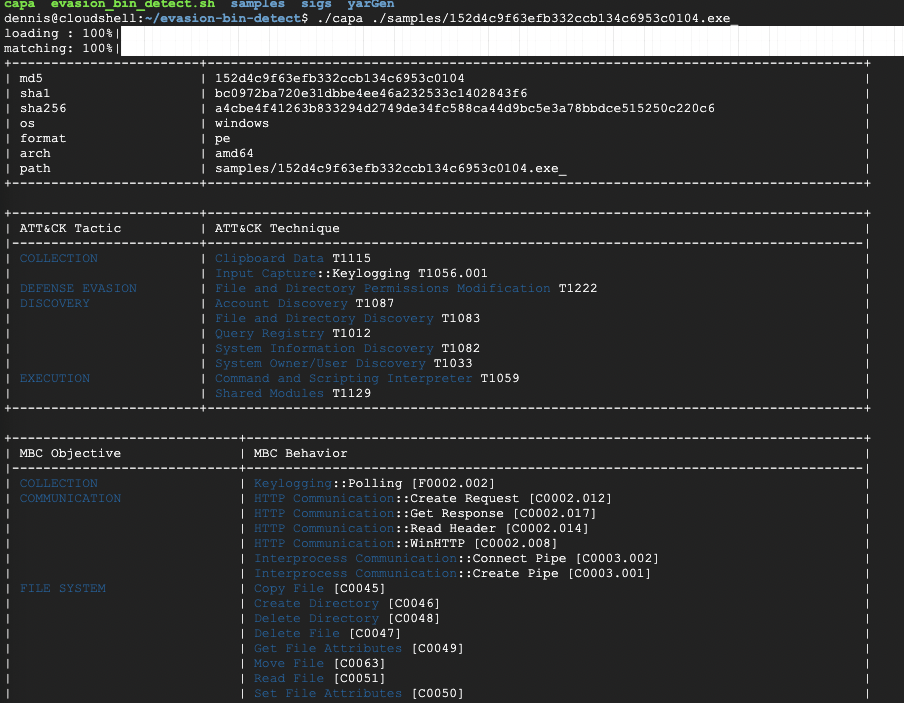

Analysts and threat detection engineers usually use tools to perform static analysis or file property checks, then filter on known capabilities and payloads in high-fidelity areas. One example is capturing the victim’s input through keylogging, a known technique (T1056.001) in the MITRE ATT&CK framework.


Freely available tools such as Capa from Mandiant (now part of Google Cloud) and yarGen are commonly used to make decisions and generate threat detection signatures for use by endpoint solutions or, in some cases, Runtime Application Self-Protection (RASP) tools. A sample output of the Capa tool is shown below:

Sample Capa tool output

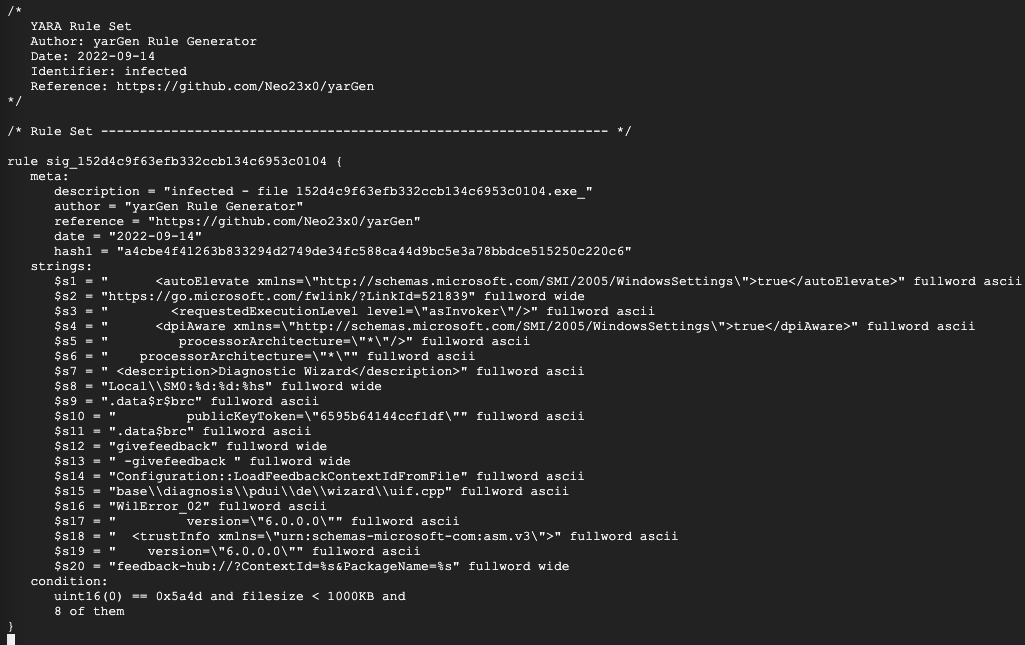

A security analyst or engineer could easily automate some of the criteria of selecting a higher confidence set of known malicious behavior sets and kick off the YARA generation process. The following is a screenshot of the output of yarGen default rule templates:

Output of yarGen default rule templates

Example Legacy Code

An example script that does this in batch fashion, iterating through a directory, can be found below:

#!/usr/bin/env bash
# REQUIREMENTS:
#   Python 3.9 runtime installed. yarGen cloned, venv activated, dependencies installed, and yarGen database updated
# Test with files at https://github.com/mandiant/capa-testfiles
# Example: wget -P ./samples/ https://github.com/mandiant/capa-testfiles/raw/master/152d4c9f63efb332ccb134c6953c0104.exe_
# USAGE: keylogging-bin-detect.sh /path/to/samples /output/path/yarasigs
# TROUBLESHOOTING: for possible venv activation issues, reinstall pip requirements: cd ./yarGen/ && pip install -r requirements.txt

WORKING_PATH=$(pwd)

# trim trailing slashes from the input and output paths
INPUTDIR=$(echo "$1" | sed 's:/*$::')
OUTPUTDIR=$(echo "$2" | sed 's:/*$::')

echo "Processing from $INPUTDIR"
echo "Writing to $OUTPUTDIR"

for file in "$INPUTDIR"/*
do
    echo "$file"
    # capa cannot handle packed files
    CAPA_OUT=$(./capa "$file")
    if [[ $CAPA_OUT == *"Keylogging"* ]]; then
        echo "Keylogging techniques found in $file"
        echo "$CAPA_OUT"
        echo "Starting yarGen ..."
        FILENAME=$(basename "$file")
        # yarGen only scans folders, so copy the desired binary to a temporary directory for this run
        mkdir -p /tmp/infected
        cp "$INPUTDIR/$FILENAME" /tmp/infected/
        # a plain "source $WORKING_PATH/yarGen/bin/activate" sometimes does not work, so wrap it in a function
        venv_activate() {
            source "$WORKING_PATH/yarGen/bin/activate"
        }
        venv_activate
        echo "yarGen python venv activated ..."
        python3 ./yarGen/yarGen.py -m "/tmp/infected" -z 3 --excludegood --score --opcodes -o "$OUTPUTDIR/$FILENAME.yar"
        # clean up
        rm -rf /tmp/infected
    fi
done

As the logic shows, this is a standalone script that assumes yarGen and the Capa tool are in the working directory. It performs a simple substring keyword search on the Capa output before handing processing to yarGen.
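
The substring check at the heart of the script can be sketched in Python as follows. This is a minimal illustration rather than part of the original tooling: the function name `select_for_yargen` and the sample output strings are hypothetical, and the text passed in stands in for whatever Capa printed for each file.

```python
def select_for_yargen(capa_outputs, keyword="Keylogging"):
    """Return the file names whose Capa output mentions the keyword.

    capa_outputs maps a file name to the text Capa printed for it.
    """
    return [name for name, text in capa_outputs.items() if keyword in text]


# Hypothetical Capa output snippets, used only for illustration
outputs = {
    "sample_a.exe_": "COLLECTION  Input Capture::Keylogging T1056.001",
    "sample_b.exe_": "DISCOVERY  File and Directory Discovery T1083",
}
selected = select_for_yargen(outputs)
print(selected)
```

Keeping the filter as a pure function makes it easy to swap the keyword for another capability later without touching the code that runs the tools.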

Practicality and Scope

Before committing to a transformation initiative, teams should “work backward”: start with the end state in mind before constructing the path towards it. Many security teams want as much automation as possible in both threat detection and response, with all tools working in harmony. Newer commercial solutions such as Security Orchestration, Automation, and Response (SOAR) platforms can help bridge the gap.

However, SOARs do not solve every issue. Open questions may include which platforms your enterprise will support in the future due to End of Service Life (EOSL) requirements, computing scalability, and even inter-API flexibility given the limits of a SOAR. For security teams looking to transform their legacy applications or workflows into the cloud, the choice usually comes down to two common approaches: containers or serverless.

Moving multiple process streams into a single, homogeneous cloud workflow can be time consuming and difficult. Start by focusing on the need that drives your transformation.

Let’s assume our YARA generation automation is a high value security requirement.

Collect the pre-transformation struggles as a business objective. Here are some examples:

- Need to centralize processing and scale to meet demand as more threat hunters and detection engineers onboard to the organization

- The organization is retiring the on-premises servers that typically host and process malware from its sandbox VLANs

- The existing security tools have integrated support for RESTful APIs but not running local scripts for automation

Deciding on a Tech Stack

Now that we have selected our example high-value process to transform (automated YARA signatures based on keylogging malware), we need to decide whether to replatform and refactor some or all of the code. Keep in mind that we are starting with a _per process_ scope and prioritization rather than trying to automate the entire security operations program at once.

The following is a generic use case table to help security teams decide on one path or another for the general runtime stack:

| Architecture Type | Process Time Considerations | Code Refactoring Considerations | Event Drivers |
|---|---|---|---|
| Serverless | Short lived ephemeral <10 minutes of runtime | More work to move legacy code. By default, more limited in runtime environments out of the box. | Better for RESTful, HTTP, or gRPC and CSP native (likely JSON) service to service events as single serve functions in a state machine |
| Containers | Longer processing time requirements and increased local storage requirements | Less work, and can allow for leaving some legacy code untouched | Better for mixed code languages, raw network sockets, and event input and outputs that are custom or non-CSP native |

Other key questions to ask during transformation planning concern the resources available at the business level, including:

- Time: How much time would it take security engineers to perform the refactoring?

- Cost: Usually related to time, but also includes compute resourcing for the new desired runtime.

- CSP: Which cloud service provider is allowed or generally used (e.g. AWS, GCP, Azure), and what service-specific limitations apply to the security team’s entire suite of tools for future integration?

Transformation

For our example, let’s assume the typical binary scanning and YARA rule generation takes roughly 5-7 minutes per file. In addition, a REST service is nice to have but not a requirement. The last major assumption is that a faster turnaround time is required to meet the team’s business and end-of-year goals.

With the above assumptions, it makes sense to containerize the function into its own service, which can take in multiple batches of files depending on client-side preference. Since refactoring all the code (including porting dependencies such as Capa and yarGen) is impractical given the timeliness requirements, we can wrap the shell scripts into network-accessible service functions.
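
The reasoning behind this choice can be written down as a small decision helper. The sketch below is only an illustration of the use case table: the function name `choose_runtime` and its three inputs are hypothetical simplifications, and a real decision would also weigh cost and CSP constraints.

```python
def choose_runtime(runtime_minutes, needs_raw_sockets, keep_legacy_code):
    """Pick a runtime following the use case table in this article.

    Serverless suits short-lived jobs (under 10 minutes) driven by
    HTTP-style events; containers suit longer jobs, raw sockets, or
    legacy code that should stay largely untouched.
    """
    if runtime_minutes >= 10 or needs_raw_sockets or keep_legacy_code:
        return "containers"
    return "serverless"


# The assumptions above: about 7 minutes per file, REST optional,
# and no time to port Capa and yarGen.
decision = choose_runtime(runtime_minutes=7, needs_raw_sockets=False, keep_legacy_code=True)
print(decision)
```

Note that the runtime alone would fit a serverless function here; it is the need to leave the legacy dependencies untouched that tips the choice towards containers.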

Raw Socket Method

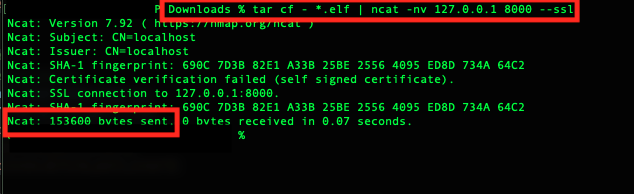

An easy win for engineers who have less experience, or no need for a RESTful service, is the popular Nmap suite, which contains a netcat successor called Ncat. Newer Ncat versions allow multiple connections in “daemon” mode without being attached to an init.d or systemd registered service.

Ncat

In addition, Ncat in both server and client modes can automatically generate ad hoc SSL certificates, so communication can be either clear text or encrypted. The following diagram shows how the application logic changes:

How the application logic changes

The Ncat socket “daemon” can accept multiple files by piping its standard output into the tar extraction mechanism built into Linux:

ncat -nlkp 8000 --ssl | tar xf -

Analysts and engineers can send files from the client side over a raw TCP socket wrapped in SSL with a syntax like this:

tar cf - ./*.exe | ncat -n <container_vip> 8000 --ssl

The above syntax will “tar ball” all the files in the working directory with the exe extension. (The batch can contain mixed binary types, since our sample script relies on Capa to inspect each file.)
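
For clients where piping `tar` into `ncat` is not convenient, the same transfer can be sketched with the Python standard library. This is an illustrative equivalent, not part of the original workflow: the function names are hypothetical, and certificate verification is switched off only on the assumption that the server uses an ad hoc, self-signed certificate.

```python
import io
import socket
import ssl
import tarfile


def build_tar_bytes(paths):
    """Pack the given files into an uncompressed tar archive held in memory."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as archive:
        for path in paths:
            archive.add(path)
    return buffer.getvalue()


def send_archive(host, port, payload):
    """Send the archive over TLS to the listening Ncat server.

    Verification is disabled because the server is assumed to present
    an ad hoc, self-signed certificate (ncat --ssl).
    """
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    with socket.create_connection((host, port)) as raw_sock:
        with context.wrap_socket(raw_sock) as tls_sock:
            tls_sock.sendall(payload)
```

A call such as `send_archive("<container_vip>", 8000, build_tar_bytes(["./sample.exe"]))` would mirror the shell pipeline above. In a production setting a trusted certificate and normal verification would be preferable to the ad hoc mode.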

Now that you have an easy mechanism for ingesting multiple binaries into a directory, you can create a quick bash script, executed through a cron job, that polls the directory where the files extracted by Ncat land:

#!/usr/bin/env bash
# on-demand poll designed to be used with cron
# crontab -e: */5 * * * * ./file_intake_poll.sh

# count the regular files waiting in the intake directory
FILE_COUNTER=$(find ./file_intake/ -type f | wc -l)

if [[ $FILE_COUNTER -ne 0 ]]; then
    # replace ./sigs with an NFS-compatible volume, or modify to copy the rules out with gsutil cp to gs://yourbucket
    source ./keylogging-bin-detect.sh ./file_intake/ ./sigs
fi

# wait for keylogging-bin-detect to return and allow slow disks to catch up
sleep 10

# note: in zsh, rm with a wildcard prompts for confirmation unless the rm_star_silent option is set
rm -rf ./file_intake/*

# clean up sigs if they are not sent anywhere else
#rm -rf ./sigs/*

A cron job entry that runs the script every 5 minutes to poll the directory can be added similar to the following:

# Apply dos2unix or unix2dos if porting the file between platforms

*/5 * * * * ./file_intake_poll.sh 2>&1

# An empty line is required at the end of this file for a valid cron file.
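
The "is there anything to process" check in the polling script can also be expressed in Python by counting directory entries directly instead of parsing command output. This is a minimal sketch; the function name `pending_files` is hypothetical, and the temporary directory stands in for `./file_intake/`.

```python
import tempfile
from pathlib import Path


def pending_files(intake_dir):
    """Return the regular files waiting in the intake directory."""
    return sorted(p for p in Path(intake_dir).iterdir() if p.is_file())


# Demonstration against a throwaway directory
with tempfile.TemporaryDirectory() as intake:
    empty_count = len(pending_files(intake))
    (Path(intake) / "sample.exe").write_bytes(b"MZ")
    one_count = len(pending_files(intake))

print(empty_count, one_count)
```

Counting files this way ignores subdirectories and the `.` and `..` entries, so an empty intake directory reliably reports zero.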

RESTful Method

As an alternative to a raw TCP socket with Ncat, Python 3.x can be used with Flask as a RESTful API server. Functions are created to route the schema paths and further decouple the original script if needed. The following sample code shows a service that gives control over the binary upload mechanism and the generation function at the same time, without relying on cron job polling:

#!/usr/bin/env python3
# code modified from:
# ref https://pythonhosted.org/Flask-Uploads/
# ref https://stackoverflow.com/questions/39321540/how-to-process-an-upload-file-in-flask
import subprocess

from flask import Flask, request
from flask_uploads import UploadSet, configure_uploads

app = Flask(__name__)
malbins = UploadSet(name='malbins', extensions=('exe', 'bin', 'elf', 'infected'))
# Flask-Uploads reads the destination directory from UPLOADED_<SET NAME>_DEST
app.config['UPLOADED_MALBINS_DEST'] = 'file_intake'
configure_uploads(app, malbins)


@app.route("/upload", methods=['POST'])
def upload():
    # the multipart form field carrying the binary must be named 'malbins'
    if 'malbins' not in request.files:
        return "NO FILE PROVIDED", 400
    malbins.save(request.files['malbins'])
    return "UPLOAD COMPLETE"


@app.route("/generate", methods=['GET'])
def generate():
    # run the legacy script against the upload directory and capture its output
    completed = subprocess.run(
        ["./keylogging-bin-detect.sh", "./file_intake/", "./sigs"],
        capture_output=True, text=True)
    # create function for taking yara rules to somewhere else in future
    return completed.stdout


if __name__ == "__main__":
    # debug mode is intended for local testing only
    app.run(host='0.0.0.0', debug=True)
    # older Flask versions allow an ad hoc cert with pyopenssl
    # app.run(host='0.0.0.0', debug=True, ssl_context='adhoc')
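
A client for the upload endpoint can be sketched with only the Python standard library. The helper names below are hypothetical, and the form field name `malbins` is an assumption: it has to match whichever key the server reads from `request.files`.

```python
import urllib.request
import uuid


def encode_multipart(field, filename, data, boundary=None):
    """Build a multipart/form-data body carrying a single file."""
    boundary = boundary or uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def upload_binary(base_url, filename, data, field="malbins"):
    """POST the binary to the /upload route and return the response text."""
    body, content_type = encode_multipart(field, filename, data)
    req = urllib.request.Request(
        base_url + "/upload", data=body, method="POST",
        headers={"Content-Type": content_type},
    )
    with urllib.request.urlopen(req) as response:
        return response.read().decode()
```

After one or more uploads, a plain GET request to the `/generate` route would trigger the signature generation step on the server.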

Replatform and Refactor

We can further de-couple the code and include different routes in a full-blown API schema that can separate the Capa filtering and yarGen processing as separate entities further removing the need for the original bash script.

However, because Capa is a local binary and yarGen was not designed to run as a Python import library, we need to keep using an OS-level subprocess call to the file system. A full OpenAPI or Swagger specification would be a large effort for a very small return on investment.
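
One way to keep such OS-level calls contained is a small wrapper around `subprocess.run`. The sketch below is illustrative: `run_tool` is a hypothetical helper, the 600 second timeout is an arbitrary assumption, and a Python one-liner stands in for Capa so the example runs anywhere.

```python
import subprocess
import sys


def run_tool(command, timeout_seconds=600):
    """Run a local tool and return its standard output as text.

    The command is an argument list, so file names are never
    interpreted by a shell.
    """
    completed = subprocess.run(
        command, capture_output=True, text=True,
        timeout=timeout_seconds, check=True,
    )
    return completed.stdout


# Stand-in command so the sketch runs without Capa installed;
# in practice the list would look like ["./capa", sample_path].
output = run_tool([sys.executable, "-c", "print('Keylogging')"])
found = "Keylogging" in output
print(found)
```

With `check=True` a non-zero exit code raises `subprocess.CalledProcessError`, which lets an API route report a failed scan instead of silently returning empty output.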

In our transformation strategy, replatforming requires augmenting our legacy code and retrofitting networking functionality. Simple refactoring of our standalone script is more easily done using the Python Flask RESTful method.

Although Capa and yarGen may not be replaced in this phase, an additional function in the API can be created such as a copy mechanism to stage the YARA rule artifacts to another storage location or write to a NoSQL wide column database for future analytics.
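
A copy mechanism of this kind could start as simply as the sketch below, which stages generated rules into a second directory. The function name `stage_rules` and the directory layout are hypothetical; a bucket or database upload would replace the local copy in a cloud deployment.

```python
import shutil
import tempfile
from pathlib import Path


def stage_rules(sigs_dir, staging_dir):
    """Copy every .yar file from sigs_dir into staging_dir.

    Returns the sorted names of the files that were copied.
    """
    target = Path(staging_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for rule_file in sorted(Path(sigs_dir).glob("*.yar")):
        shutil.copy2(rule_file, target / rule_file.name)
        copied.append(rule_file.name)
    return copied


# Demonstration with throwaway directories
with tempfile.TemporaryDirectory() as sigs, tempfile.TemporaryDirectory() as staging:
    (Path(sigs) / "sample.exe_.yar").write_text("rule demo { condition: true }")
    (Path(sigs) / "notes.txt").write_text("not a rule")
    staged = stage_rules(sigs, staging)
    staged_exists = (Path(staging) / "sample.exe_.yar").exists()

print(staged)
```

Only files with the `.yar` extension are staged, so scratch files left in the signature directory are not propagated.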

Refactoring the original script by transferring some functionality to the Python Flask server can be done in one or more of the API functions. One way could be to extend the original upload function by running Capa and the Keylogging filter requirement before returning.

Additional logic would be required on the client side or server side to kick off the yarGen process, and we would also need to modify the ‘/generate’ endpoint. The example below adds logic to the file, moving further away from the original bash script:

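import subprocess  # add to the imports at the top of the server file


@app.route("/upload", methods=['POST'])
def upload():
    if 'malbins' not in request.files:
        return "NO FILE PROVIDED", 400
    # save() returns the stored file name; path() resolves it inside the upload directory
    filename = malbins.save(request.files['malbins'])
    file_uploaded_path = malbins.path(filename)
    # an argument list avoids shell interpretation of the uploaded file name
    result = subprocess.run(["capa", file_uploaded_path], capture_output=True, text=True).stdout
    if "Keylogging" in result:
        return "Keylogging Functionality Discovered"
    return "UPLOAD COMPLETE"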

For simplicity, migration speed, and because of our dependence on shell-executed components, we will keep our original RESTful method sample code intact.

Containerization

Either method of file ingestion and processing is acceptable. To complete the process transformation, we must wrap or “containerize” the legacy scripts, together with the socket or RESTful implementation, in a build specification file. Docker is a popular container tool, and its images can also run on platforms such as OpenShift. Here is an example dockerFile to build the image:

# Sample dockerFile for keylogging-bin-detect
FROM ubuntu:latest

# copy the keylogging-bin-detect files
COPY . ./

# set working dir and permissions
#WORKDIR /home/keylogging-bin-detect
RUN ["chmod", "0755", "keylogging-bin-detect.sh"]
RUN ["chmod", "0755", "file_intake_poll.sh"]

## SOCKET RUNTIME METHOD ##
# install ncat and cron for the 5 minute interval polls
RUN apt-get update && apt-get install -y ncat nmap cron
COPY ./file_poll_cron /etc/cron.d/file_poll_cron
RUN ["chmod", "0644", "/etc/cron.d/file_poll_cron"]
RUN ["crontab", "/etc/cron.d/file_poll_cron"]
EXPOSE 8000

# use ncat socket level for file intake with ad hoc generated certs on port 8000
# tar cf - *.elf | ncat -nv <CONTAINER VIP> 8000 --ssl
ENTRYPOINT ["./run_ncat.sh"]

## RESTFUL API SERVER RUNTIME METHOD ##
# to run the RESTful server instead of the ncat and cron poll method, use the lines below
# (requires python3 and pip3 to be installed in the image)
#RUN pip3 install Flask Flask-Uploads
#ENTRYPOINT ["python3", "-m", "flask", "run", "--host=0.0.0.0", "--port=8000"]
#CMD ["python3", "-m", "flask", "run", "--host=0.0.0.0", "--port=8000"]

In the above file you would comment out the method you do not want, then build and run locally using a syntax such as:

docker build -t keylogging-bin-detect:latest -f ./dockerFile ./ && docker run -dp 8000:8000 keylogging-bin-detect

An example test of sending a binary to the container when using the raw socket method:

Sending a binary to the container

Runtime at Scale

Now that the Docker image has been built, it can be deployed to an AWS ECS or GCP GKE cluster for a robust service. A simple GCP example that uses GKE after building your image can be run from Cloud Shell:

gcloud components install kubectl

gcloud artifacts repositories create my-security-tool-repo \
    --repository-format=docker \
    --location=us-central1 \
    --description="Example security tool refactor repo"

docker build -t us-central1-docker.pkg.dev/${PROJECT_ID}/my-security-tool-repo/keylogging-bin-detect:v1 .

gcloud auth configure-docker us-central1-docker.pkg.dev

docker push us-central1-docker.pkg.dev/${PROJECT_ID}/my-security-tool-repo/keylogging-bin-detect:v1

gcloud config set compute/zone us-central1-c

gcloud config set compute/region us-central1

gcloud container clusters create-auto security-tools-cluster

gcloud container clusters get-credentials security-tools-cluster --region us-central1

kubectl create deployment keylogging-bin-detect --image=us-central1-docker.pkg.dev/${PROJECT_ID}/my-security-tool-repo/keylogging-bin-detect:v1

kubectl scale deployment keylogging-bin-detect --replicas=2

kubectl autoscale deployment keylogging-bin-detect --cpu-percent=80 --min=1 --max=3
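
To build intuition for what the autoscale settings do, the core Horizontal Pod Autoscaler rule can be approximated in a few lines of Python. This is a simplified sketch: it applies the documented ratio formula and the min/max bounds, but it leaves out the tolerance band and stabilization behavior of the real controller, and the CPU figures are made-up inputs.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas, max_replicas):
    """Approximate the Horizontal Pod Autoscaler scaling rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to the configured minimum and maximum.
    """
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


# Settings from the kubectl autoscale command: target 80%, min 1, max 3
busy = desired_replicas(2, 120, 80, 1, 3)
idle = desired_replicas(2, 20, 80, 1, 3)
print(busy, idle)
```

With two replicas averaging 120% of their requested CPU the sketch scales up to three pods, and at 20% it scales down to one, which matches the bounds set on the deployment.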

Expanding on Shift Left

In our example, the legacy code did not automatically deploy the YARA rules to any remote artifact repositories such as Amazon S3 or GCP Storage buckets. In addition, the YARA rules were not deployed automatically to the endpoints that are in need of them. This section provides a brief example and possibility of expanding on the “shift left” DevSecOps culture.

Note: In production, you would create unit tests and send the YARA rules through a CI/CD pipeline that has a stage for validating the YARA rules for accuracy before the release to endpoints with other services. The example we provide is one of many possibilities.
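
As a rough idea of what an early pipeline check might look like, the sketch below performs a structural smoke test on rule text. It is not a substitute for compiling the rules with YARA itself, and the function name `lint_rule_text` and the specific checks are illustrative assumptions.

```python
import re

# Matches declarations such as "rule name" or "private rule name"
RULE_HEADER = re.compile(
    r"^\s*(?:(?:private|global)\s+)*rule\s+([A-Za-z_]\w*)", re.MULTILINE)


def lint_rule_text(text):
    """Return a list of structural problems found in YARA rule text."""
    problems = []
    names = RULE_HEADER.findall(text)
    if not names:
        problems.append("no rule declaration found")
    if len(names) != len(set(names)):
        problems.append("duplicate rule names")
    if "condition:" not in text:
        problems.append("missing condition section")
    return problems


good_rule = (
    "rule demo_keylogger {\n"
    "    strings:\n"
    '        $a = "GetAsyncKeyState"\n'
    "    condition:\n"
    "        $a\n"
    "}\n"
)
good_result = lint_rule_text(good_rule)
print(good_result)
```

A later pipeline stage would still need to compile each rule and test it against known good and known bad samples before release.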

To further extend our example, let’s assume that the compute instances that need the rules use the Wazuh agent as the EDR solution, which has native YARA rule integration. As of Wazuh 3.10.x and higher, endpoint security teams can queue and schedule commands. Cron jobs can be used to poll a repository of YARA rules, for example after modifying our legacy example code to output to an S3 or GCP Storage bucket instead.

A sample XML configuration file is provided below to allow the YARA generated rules to be pulled from a GCP Storage bucket:

<wodle name="command">
  <disabled>no</disabled>
  <tag>test</tag>
  <command>gsutil cp gs://YOURBUCKET/YARARULES/*.yar /path/to/endpoint/yararules && systemctl restart wazuh-agent</command>
  <interval>1d</interval>
  <ignore_output>no</ignore_output>
  <run_on_start>yes</run_on_start>
  <timeout>0</timeout>
</wodle>

Alternatively, a Chef recipe can be created using the standard built in cookbooks to deploy gsutil and run the copies on a polling interval as well:

# chef recipe deploy and poll
execute 'initial_deploy_gsutil' do
  command 'sudo apt-get install -y apt-transport-https ca-certificates gnupg && echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list && curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key --keyring /usr/share/keyrings/cloud.google.gpg add - && curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo tee /usr/share/keyrings/cloud.google.gpg && sudo apt-get update && sudo apt-get install -y google-cloud-cli'
end

cron 'threat-hunt-yararules' do
  minute '30'
  command 'gsutil cp gs://YOURBUCKET/YARARULES/*.yar /path/to/endpoint/yararules && systemctl restart wazuh-agent'
end

Summary

In our example we learned how to take a simple bash-based process, augment it, and enable TCP socket or RESTful connectivity. We also containerized the solution into a Docker image and deployed the image as a container runtime on a GKE cluster. Finally, we showed possible ways of extending the example by deploying the YARA signatures to endpoints using Wazuh and Chef. Refactoring legacy code does not have to be an all-or-nothing endeavor. Security engineers and analysts can take advantage of serverless and containerization in mixed environments and runtime configurations, where the priority should be to transform the highest value processes first.