Using cloud native security features for defense in depth

Companies are re-examining their cloud security programs this week. We’ve seen some great recommendations, including updating your processes, auditing and trimming system permissions, and building security into CI/CD pipelines. These are a few of our favorites.

Other experts suggest you should buy bolt-on products for heuristics, anomaly detection, and data loss prevention. Indeed, the security industry has a virtually unlimited pile of things to sell.

But before you go shopping, let’s take a look at what you already have at your disposal to protect your data in the cloud.

In this article we highlight some AWS security controls that you can use now to protect your data with minimal cost. We’ll look at only AWS services and features that are already available to you. No procurement forms, and no statements of work.

We’ll cover:

- Server Access to S3: IAM roles and S3 policies
- Data Security: upgrading your encryption to provide another layer of defense
- Exfiltration Prevention: using AWS networking features to limit outbound data loss

Practical Proactive Amazon S3 Security

Server Access to S3

Proper access control in the cloud is achieved by adhering to least privilege: only the people or computers that need access to sensitive data as part of their job function should have it.

Amazon EC2 instances commonly need access to data. In a typical example, an EC2 instance needs to access one object (file) in one bucket. Often, for convenience, a developer will open up all access to S3 from their instance so it can reach the bucket and do its job. The following policy is not recommended:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:*"
      ],
      "Resource": ["*"]
    }
  ]
}
```

Notice the “*” in the policy, which is called a wildcard. This means that the policy gives the instance full access (s3:*) to every bucket, or “resource”.

Instead, you should give this instance an IAM policy that is scoped to ONLY that bucket and object, such as this:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": ["arn:aws:s3:::my-bucket/my-object.conf"]
    }
  ]
}
```

Or, minimally, to a specific bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": ["arn:aws:s3:::my-bucket/*"]
    }
  ]
}
```

Scoping down IAM policies for applications that run on virtual machines limits the data an attacker can reach through a compromised instance.
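A quick way to catch over-broad policies like the first example during an audit is to scan them for wildcards. The sketch below is a hypothetical helper (`find_wildcards` is our own name, not an AWS API). It only checks `Allow` statements for a bare `*` resource or an action ending in `:*`, and it ignores `NotAction`, `NotResource`, and conditions, so treat it as a first-pass filter rather than a full policy review.

```python
from __future__ import annotations

import json


def _as_list(value) -> list:
    """IAM accepts either a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_wildcards(policy: dict) -> list[str]:
    """Flag Allow statements that grant every action in a service or every resource.

    Simplified sketch: ignores NotAction/NotResource, Deny statements, and conditions.
    """
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action")):
            # "*" is every action in every service; "s3:*" is every S3 action
            if action == "*" or action.endswith(":*"):
                findings.append(f"Statement {i}: wildcard action {action!r}")
        for resource in _as_list(stmt.get("Resource")):
            # A bare "*" matches every resource (for S3, every bucket and object)
            if resource == "*":
                findings.append(f"Statement {i}: wildcard resource {resource!r}")
    return findings


if __name__ == "__main__":
    broad = json.loads("""
    {"Version": "2012-10-17",
     "Statement": [{"Effect": "Allow", "Action": ["s3:*"], "Resource": ["*"]}]}
    """)
    for finding in find_wildcards(broad):
        print(finding)
```

Run against the broad policy, this prints `Statement 0: wildcard action 's3:*'` and `Statement 0: wildcard resource '*'`; the two scoped policies produce no findings, because `arn:aws:s3:::my-bucket/*` is limited to one bucket.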

Data Security

Data security is a deep topic, touching all aspects of business from legal to tech. Understanding what data you have and where it lives is an ongoing challenge for some companies. We’ll keep the focus on options you have in AWS.

The court filing in the Capital One breach indicates that Capital One tokenized some of the breached data, making sensitive fields unreadable. This is an effective way to protect structured data, though less so for images and documents.

Files uploaded to S3 can be protected by using server-side encryption. We recently wrote a post about the variety of ways to encrypt data in S3. Here’s the takeaway: you can upgrade your encryption settings to provide another layer of data security.

AWS-managed encryption is a popular choice. It’s free, and enabling server-side encryption in S3 is just a checkbox. It helps meet some compliance requirements without additional cost or operational impact.

However, AWS-managed encryption keys provide “transparent encryption”: S3 automatically decrypts an object for anyone who has permission to retrieve it. So transparent encryption won’t protect your files when access control is misconfigured.

I can see an AWS-managed default master key, but I can’t do much with it.

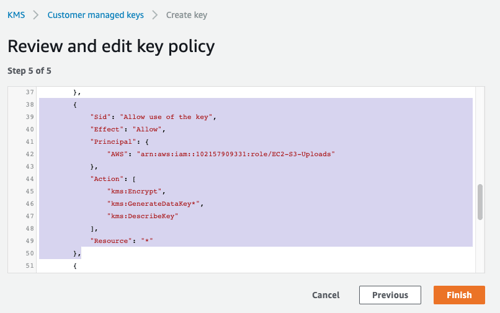
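In practice, upgrading usually means pointing the bucket’s default encryption at a customer managed KMS key, whose policy you control, instead of the AWS-managed `aws/s3` key. The sketch below only builds the configuration document; `default_encryption_config` is a hypothetical helper, and the key ARN is a placeholder in AWS’s documentation account `111122223333`. As we understand the S3 API, specifying `aws:kms` without `KMSMasterKeyID` falls back to the AWS-managed key, which is the transparent behavior described above.

```python
from __future__ import annotations

import json


def default_encryption_config(kms_key_arn: str | None = None) -> dict:
    """Build an S3 ServerSideEncryptionConfiguration for SSE-KMS.

    With kms_key_arn=None, S3 uses the AWS-managed aws/s3 key (transparent to
    anyone allowed to read the object). With a customer managed key ARN, the
    key policy becomes a second gate on decryption.
    """
    default = {"SSEAlgorithm": "aws:kms"}
    if kms_key_arn is not None:
        default["KMSMasterKeyID"] = kms_key_arn
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}


if __name__ == "__main__":
    # Placeholder ARN: a hypothetical key in AWS's example account 111122223333
    key_arn = "arn:aws:kms:us-east-1:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab"
    print(json.dumps(default_encryption_config(key_arn), indent=2))
```

With boto3, a dictionary of this shape is what `put_bucket_encryption(Bucket=..., ServerSideEncryptionConfiguration=...)` expects. Changing the default only affects objects written afterward; existing objects keep their original encryption until they are rewritten, for example by copying them in place.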

When you create and manage your own AWS KMS key, you can modify the access control policy. The console wizard helps build a basic policy, but you’ll still need to understand how your app uses the data on S3. Testing is advised.

Editing a KMS key policy in the AWS KMS Console

When configured with a least privilege policy, the KMS key policy can prevent unauthorized decryption, so the download request is denied even when the bucket policy allows public access. To enforce separation of duties, the key policy can be managed independently of the bucket policy.
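To make the idea concrete, here is a sketch of a key policy that separates key administration from decryption. Everything in it is an assumption for illustration: the account ID is AWS’s documentation example, the role names `key-admin` and `report-reader` are hypothetical, and `build_key_policy` is our own helper. The decrypt statement is limited to requests that S3 makes on the role’s behalf via the `kms:ViaService` condition key. It deliberately omits the common statement granting the account root `kms:*`, so IAM policies alone cannot grant decryption; test carefully, because a key policy without a working administrator can leave the key unmanageable.

```python
from __future__ import annotations

import json


def build_key_policy(account_id: str, admin_role: str, reader_role: str, region: str) -> dict:
    """Sketch of a least-privilege KMS key policy (hypothetical roles)."""
    admin_arn = f"arn:aws:iam::{account_id}:role/{admin_role}"
    reader_arn = f"arn:aws:iam::{account_id}:role/{reader_role}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Administrators manage the key but get no data actions such as Decrypt.
                # Note: kms:Put* includes PutKeyPolicy, so whoever can assume this
                # role can still rewrite the policy; guard that role accordingly.
                "Sid": "KeyAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": admin_arn},
                "Action": [
                    "kms:Describe*",
                    "kms:Get*",
                    "kms:List*",
                    "kms:Put*",
                    "kms:Update*",
                    "kms:Enable*",
                    "kms:Disable*",
                    "kms:ScheduleKeyDeletion",
                    "kms:CancelKeyDeletion",
                ],
                "Resource": "*",  # in a key policy, "*" refers to this key
            },
            {
                # The application role may decrypt, but only through S3 in one region
                "Sid": "DecryptThroughS3Only",
                "Effect": "Allow",
                "Principal": {"AWS": reader_arn},
                "Action": "kms:Decrypt",
                "Resource": "*",
                "Condition": {
                    "StringEquals": {"kms:ViaService": f"s3.{region}.amazonaws.com"}
                },
            },
        ],
    }


if __name__ == "__main__":
    policy = build_key_policy("111122223333", "key-admin", "report-reader", "us-east-1")
    print(json.dumps(policy, indent=2))
```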

Important: Assigning decrypt permissions to EC2 instances should be considered carefully for each application. An attacker can leverage the permissions assigned to a compromised EC2 instance.

Important: Using KMS to encrypt your data establishes a reasonable (example: ~$7/mo/key) but long-term monthly recurring cost. As your data grows, so does the impact of your KMS decisions. If your business is growing, we recommend adding key hierarchy and management topics to your encryption strategy.

Exfiltration Prevention

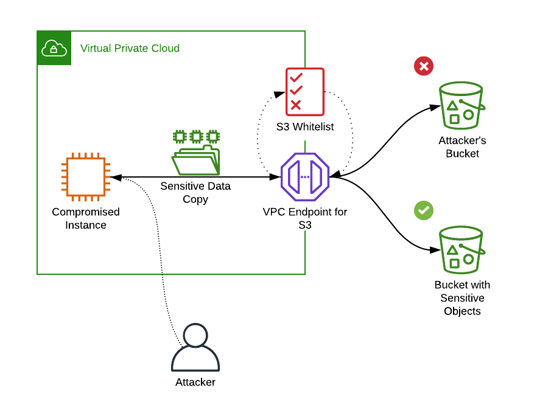

VPC Endpoints is an Amazon VPC networking feature that boosts performance and security by allowing your EC2 instances to access S3 data using a private network connection.

Attackers sometimes exfiltrate data to an S3 bucket in an AWS account they control. VPC Endpoints support an access control policy that restricts access to specific buckets, so you can create a whitelist that governs access to S3 for all of your systems in the VPC.

This VPC Endpoint policy allows uploading and downloading to a specific bucket, and only that bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Access-to-specific-bucket-only",
      "Principal": "*",
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::my-secure-bucket",
        "arn:aws:s3:::my-secure-bucket/*"
      ]
    }
  ]
}
```

Important: Using VPC Endpoints modifies the network path for access to S3. Be sure to check the documentation to understand how using VPC Endpoints can affect other AWS services that need access to S3: Amazon AppStream 2.0, AWS CloudFormation, CodeDeploy, Elastic Beanstalk, AWS OpsWorks, AWS Systems Manager, Amazon Elastic Container Registry, Amazon WorkDocs, or Amazon WorkSpaces.
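To see why an endpoint policy like this blocks uploads to an attacker’s bucket, here is a deliberately simplified model of how an Allow-only endpoint policy is evaluated: a request passes only if some `Allow` statement matches both its action and its resource, and anything else is implicitly denied. The helper names and the `my-secure-bucket` bucket name are assumptions. The sketch ignores principals, conditions, explicit `Deny` statements, and the IAM and bucket policies that AWS also evaluates, and it uses Python’s `fnmatch` to approximate IAM’s `*` and `?` wildcards.

```python
from fnmatch import fnmatchcase

# The endpoint policy from above, with a hypothetical bucket name
ENDPOINT_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "Access-to-specific-bucket-only",
            "Principal": "*",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Effect": "Allow",
            "Resource": [
                "arn:aws:s3:::my-secure-bucket",
                "arn:aws:s3:::my-secure-bucket/*",
            ],
        }
    ],
}


def _as_list(value):
    """IAM accepts a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def endpoint_allows(policy: dict, action: str, resource_arn: str) -> bool:
    """Return True if an Allow statement matches the action and resource (simplified)."""
    for stmt in _as_list(policy.get("Statement")):
        if stmt.get("Effect") != "Allow":
            continue
        # Action names are case-insensitive in IAM; ARNs are compared case-sensitively
        action_ok = any(
            fnmatchcase(action.lower(), pattern.lower())
            for pattern in _as_list(stmt.get("Action"))
        )
        resource_ok = any(
            fnmatchcase(resource_arn, pattern)
            for pattern in _as_list(stmt.get("Resource"))
        )
        if action_ok and resource_ok:
            return True
    return False  # no matching Allow: implicit deny


if __name__ == "__main__":
    print(endpoint_allows(ENDPOINT_POLICY, "s3:GetObject", "arn:aws:s3:::my-secure-bucket/report.csv"))
    print(endpoint_allows(ENDPOINT_POLICY, "s3:PutObject", "arn:aws:s3:::attacker-bucket/loot.csv"))
```

The first call prints `True` and the second prints `False`: the upload to a bucket outside the allowlist matches no `Allow` statement, so the endpoint refuses it regardless of what the caller’s credentials permit.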

Whitelisting S3 bucket access with a VPC Endpoint policy

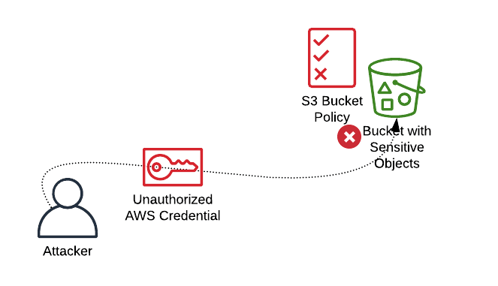

This is a helpful security control, but it only governs traffic that passes through the endpoint: if attackers obtain AWS credentials, they can use them from outside your VPC to pull the data from S3 over the Internet. For S3 buckets containing sensitive data, you can lock down access to just your VPC endpoint. The bucket will still be accessible from the systems in the VPC.

This S3 bucket policy denies every request that does not arrive through a specific VPC endpoint, so only systems in that VPC can access the bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Access-to-specific-VPCE-only",
      "Principal": "*",
      "Action": "s3:*",
      "Effect": "Deny",
      "Resource": [
        "arn:aws:s3:::my-secure-bucket",
        "arn:aws:s3:::my-secure-bucket/*"
      ],
      "Condition": {
        "StringNotEquals": {
          "aws:SourceVpce": "vpce-1a2b3c4d"
        }
      }
    }
  ]
}
```

S3 buckets can restrict access to systems in a specific VPC
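The key detail in this policy is how a `Deny` with `StringNotEquals` behaves. As we understand IAM policy evaluation, an explicit deny overrides any allow, and a negated condition operator evaluates to true when its key is missing from the request, so a request made over the Internet (with no `aws:SourceVpce` in its context) is denied even if the caller’s IAM policy allows it. The sketch below models just that one statement; the function names and request-context dictionaries are illustrative assumptions rather than an AWS API, and it assumes the request targets the bucket named in the policy.

```python
from __future__ import annotations

ALLOWED_VPCE = "vpce-1a2b3c4d"  # endpoint ID from the example bucket policy


def string_not_equals(context: dict, key: str, expected: str) -> bool:
    """Model IAM's StringNotEquals for a single value.

    Condition key names are case-insensitive, so keys are compared lowercased.
    A missing key makes a negated operator evaluate to true.
    """
    normalized = {k.lower(): v for k, v in context.items()}
    actual = normalized.get(key.lower())
    return actual is None or actual != expected


def bucket_access_decision(identity_allows: bool, request_context: dict) -> str:
    """Combine the caller's IAM allow with the bucket policy's conditional Deny."""
    # The explicit Deny from the bucket policy wins over any Allow
    if string_not_equals(request_context, "aws:SourceVpce", ALLOWED_VPCE):
        return "Deny"
    # Otherwise the request still needs an Allow (no Allow means implicit deny)
    return "Allow" if identity_allows else "Deny"


if __name__ == "__main__":
    # Stolen credentials used from the Internet: no aws:SourceVpce in the context
    print(bucket_access_decision(True, {}))
    # The same credentials used from a system routed through the endpoint
    print(bucket_access_decision(True, {"aws:SourceVpce": "vpce-1a2b3c4d"}))
```

The first call prints `Deny` and the second prints `Allow`, which is the combination that makes stolen credentials far less useful outside your VPC.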

With these two policy settings, you’ve effectively connected a virtually isolated group of computers to a virtually isolated object store. VPC Endpoints provide similar guardrails for Amazon DynamoDB.

Closing Notes

“Secure” is a point on a spectrum that must be balanced with usability and business goals. With any security program, it’s good to start with the basics by ensuring that least privilege and data security are addressed in every system, whether it is in the cloud or on-premises.

Cloud tech is evolving at a seemingly impossible rate. It’s a lot for any security team to consume. We must learn from breaches. Capital One did many things right, including:

- Making a responsible vulnerability disclosure contact method easily accessible
- Collecting and protecting event logs for evidence, including CloudTrail
- Making their customers aware in a timely manner and providing necessary information to the public

Here’s to all the teams working hard to support the long game.

ScaleSec is a cloud security and compliance consultancy that specializes in securing cloud environments and helping organizations prepare for security audits.
