Modernizing Security: AWS Series - Security Best Practices for Amazon Elasticsearch - Part Two


This is Part Two of Security Best Practices for Amazon Elasticsearch. The guidance in this blog is based on industry-standard security best practices as well as our experience with our customers. Organizations have different compliance or regulatory requirements and security threat levels, and they use Amazon Elasticsearch in different ways. Because usage varies so widely, some of these recommendations might not align directly with your business needs. For example, Kibana multi-tenancy might not apply to your organization if you have no sensitive dashboards to restrict. In Part One, we covered infrastructure and IAM. Part Two covers encryption, logging and monitoring, and configuration.

Encryption Recommendations

Utilize Encryption at Rest With Customer Managed Keys

Amazon Elasticsearch enables encryption at rest by default when fine-grained access control is turned on for your ES cluster, and this default setting uses an AWS managed customer master key (CMK). When encryption at rest is enabled, the following aspects of the domain are encrypted:

- Indices

- Elasticsearch logs

- Swap files

- All other data in the application directory

- Automated snapshots

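Manual snapshots, slow logs, and error logs are not encrypted by this setting, but you can encrypt that data outside of ES: enable server-side encryption on the S3 bucket that serves as your manual snapshot repository, and encrypt the CloudWatch Logs log groups that receive your published logs with a KMS key.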

AWS managed CMKs are the default key selected in the console for Amazon Elasticsearch, and we recommend switching to a customer managed CMK. Customer managed CMKs are more flexible than AWS managed CMKs: among other options, you can create and edit their key policies and choose whether to enable automatic rotation. Using a customer managed CMK means taking on more overhead to manage the key, in exchange for more flexibility and control.

Note: You cannot enable encryption at rest on an existing unencrypted domain. Instead, you have to re-create the cluster with encryption enabled.
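As a minimal sketch, the Python snippet below builds the `EncryptionAtRestOptions` parameter accepted by the boto3 `es` client's `create_elasticsearch_domain` call, pinning a customer managed CMK instead of the console default. The key ARN is a hypothetical placeholder, and the ARN shape check is our own loose assumption rather than an AWS validation rule.

```python
import re

# Loose shape check (our assumption, not an AWS rule) for a KMS key ARN:
# arn:<partition>:kms:<region>:<12-digit account>:key/<key id>
KMS_KEY_ARN = re.compile(r"^arn:aws[a-z-]*:kms:[a-z0-9-]+:\d{12}:key/[A-Za-z0-9-]+$")


def encryption_at_rest_options(kms_key_arn: str) -> dict:
    """Build EncryptionAtRestOptions that pin a customer managed CMK."""
    if not KMS_KEY_ARN.match(kms_key_arn):
        # Rejects aliases such as "alias/aws/es" so the AWS managed key
        # cannot be selected by accident.
        raise ValueError(f"expected a KMS key ARN, got {kms_key_arn!r}")
    # Without KmsKeyId, the service would fall back to its default key.
    return {"Enabled": True, "KmsKeyId": kms_key_arn}


# Hypothetical customer managed key ARN.
example = encryption_at_rest_options(
    "arn:aws:kms:us-east-1:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab"
)
print(example)
```

The result would be passed as `EncryptionAtRestOptions=...` when creating the domain, since encryption cannot be added to an existing unencrypted domain.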

Enable Node-to-node Encryption for Intra-Cluster Communications

The node-to-node encryption setting enables TLS 1.2 encryption for all communications between nodes in an ES cluster. This feature is available whether you use public access or VPC access for your ES domains. If it is not enabled, communications between nodes are unencrypted and could be exposed to man-in-the-middle attacks.

Similar to encryption at rest, node-to-node encryption is enabled by default if fine-grained access control is turned on for your ES cluster. When combined with the Amazon Elasticsearch feature to require HTTPS traffic to the domain, all ES traffic is encrypted to, from, and throughout the ES cluster.
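To confirm that a domain actually encrypts data at rest, between nodes, and between clients and the domain, a small audit helper can inspect its configuration. This sketch assumes the key names used by the Amazon ES `DescribeElasticsearchDomain` response (`EncryptionAtRestOptions`, `NodeToNodeEncryptionOptions`, `DomainEndpointOptions`); the `encryption_gaps` function itself is hypothetical.

```python
def encryption_gaps(config: dict) -> list:
    """List which paths are left unencrypted by a domain configuration.

    `config` uses the Amazon ES key names, e.g. the DomainStatus returned
    by DescribeElasticsearchDomain; missing keys count as disabled.
    """
    gaps = []
    if not config.get("EncryptionAtRestOptions", {}).get("Enabled", False):
        gaps.append("data at rest")
    if not config.get("NodeToNodeEncryptionOptions", {}).get("Enabled", False):
        gaps.append("node-to-node traffic")
    if not config.get("DomainEndpointOptions", {}).get("EnforceHTTPS", False):
        gaps.append("client traffic (HTTPS not enforced)")
    return gaps


# Hypothetical domain with node-to-node encryption left off.
print(encryption_gaps({
    "EncryptionAtRestOptions": {"Enabled": True},
    "NodeToNodeEncryptionOptions": {"Enabled": False},
    "DomainEndpointOptions": {"EnforceHTTPS": True},
}))
```

With boto3, the input could come from `es.describe_elasticsearch_domain(DomainName="my-domain")["DomainStatus"]`, where `my-domain` is a placeholder.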

Require HTTPS and TLS 1.2 on ES Cluster Endpoints

When enabled, this feature requires that all traffic to an ES cluster endpoint be sent over HTTPS. Requests sent over plain HTTP are rejected and never reach the ES cluster. We also recommend setting the minimum allowed TLS version to 1.2. Together, these two settings ensure that traffic between clients and the domain is encrypted with a strong protocol version.
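Both endpoint settings map to the `DomainEndpointOptions` parameter of the Amazon ES domain APIs. The sketch below builds that parameter and checks an existing one. `Policy-Min-TLS-1-2-2019-07` is the TLS 1.2 policy name documented for Amazon ES at the time of writing, so verify it against current documentation; the helper names are hypothetical.

```python
# TLS security policy names documented for the Amazon ES API (verify against
# current AWS documentation; newer policies may exist).
TLS_1_0_POLICY = "Policy-Min-TLS-1-0-2019-07"
TLS_1_2_POLICY = "Policy-Min-TLS-1-2-2019-07"


def domain_endpoint_options() -> dict:
    """Require HTTPS and a minimum of TLS 1.2 on the domain endpoint."""
    return {"EnforceHTTPS": True, "TLSSecurityPolicy": TLS_1_2_POLICY}


def endpoint_is_hardened(options: dict) -> bool:
    """True only if HTTPS is enforced with the TLS 1.2 policy above.

    Any other policy name, including newer ones, is reported as False.
    """
    return (
        options.get("EnforceHTTPS") is True
        and options.get("TLSSecurityPolicy") == TLS_1_2_POLICY
    )


print(endpoint_is_hardened(domain_endpoint_options()))
```

The same dictionary can be passed as `DomainEndpointOptions=` to either `create_elasticsearch_domain` or `update_elasticsearch_domain_config`.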

Logging and Monitoring Recommendations

Log, Monitor, and Alert for API Calls in AWS CloudTrail

Visibility into actions taken against your ES cluster is a top recommendation: it lets you act on specific events that may be malicious and gives you historical data for a post-mortem investigation. Unfortunately, at this time CloudTrail logs only calls to the configuration API, not calls to the ES APIs themselves. Nevertheless, enabling monitors and alerts for specific configuration activities lets you keep track of actions taken by your users and respond accordingly. The list of configuration APIs that are logged as CloudTrail events is available in the Amazon ES documentation.
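One way to alert on configuration API calls is an Amazon EventBridge rule that matches CloudTrail events. The sketch below assumes the `aws.es` event source and the `es.amazonaws.com` CloudTrail event source; the list of watched event names is an illustrative choice, and the local `matches` function is a simplified stand-in for testing the pattern, not EventBridge's matching engine.

```python
import json

# Illustrative selection of configuration API calls to alert on.
WATCHED_EVENTS = ["DeleteElasticsearchDomain", "UpdateElasticsearchDomainConfig"]

EVENT_PATTERN = {
    "source": ["aws.es"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {"eventSource": ["es.amazonaws.com"], "eventName": WATCHED_EVENTS},
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: every pattern key must exist in the event and its
    value must equal one of the listed values (nested dicts recurse).
    This is not EventBridge's full matching engine."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


print(json.dumps(EVENT_PATTERN))
```

The JSON output could be supplied as `EventPattern` to the boto3 `events` client's `put_rule`, with an alerting target such as an SNS topic attached through `put_targets`.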

Enable ES Log Publishing to CloudWatch Logs

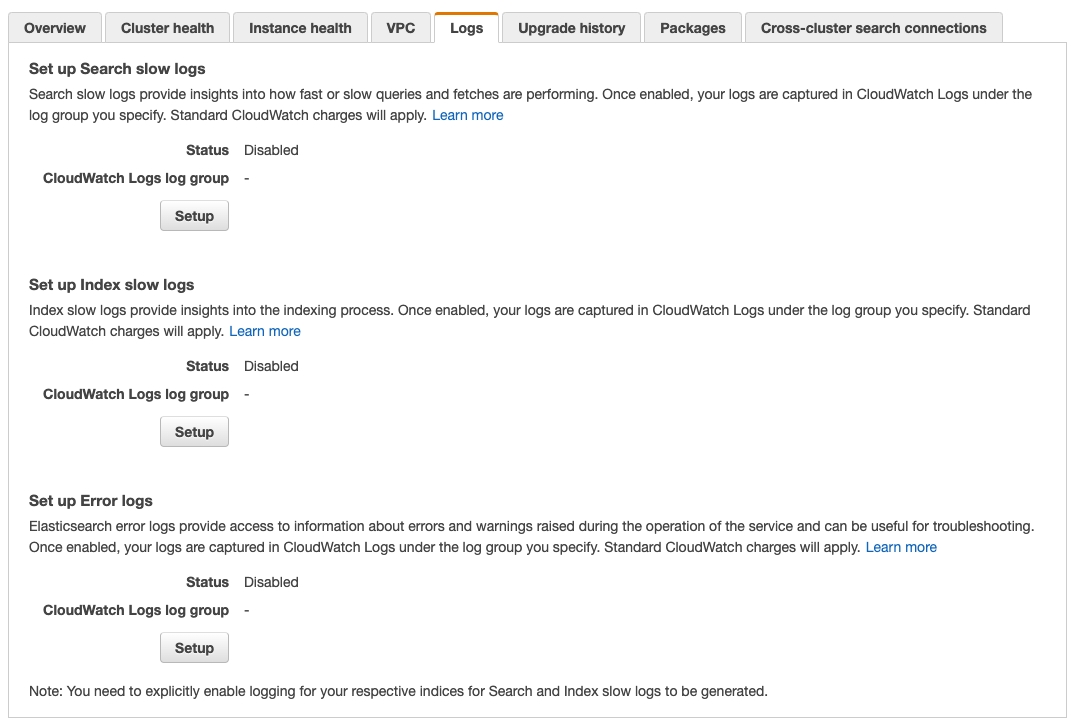

Amazon ES has three different log types that are configurable to send to Amazon CloudWatch Logs. The three log types are: error logs, search slow logs, and index slow logs. By default, these logs are not enabled or streamed to CloudWatch Logs. Error logs are beneficial to track invalid queries, challenges with snapshots or indexing, or script compilation issues. Slow logs are useful to track performance issues and it is recommended to send each log type to its own CloudWatch Logs group for better searching and debugging. Keep in mind that for slow logs you need to enable thresholds so that logging only begins once that timeframe is eclipsed.

Console screenshot of the Amazon ES Logs page
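The sketch below builds a `LogPublishingOptions` parameter that sends each log type to its own log group, plus an index settings body that sets slow log thresholds (`ES_APPLICATION_LOGS` is the API name for error logs). The account ID, region, log group names, and threshold durations are hypothetical. Amazon ES also needs a CloudWatch Logs resource policy that allows it to write to these log groups, which is not shown here.

```python
# Hypothetical account, region, and log group prefix; replace with your own.
LOG_GROUP_ARN = "arn:aws:logs:us-east-1:111122223333:log-group:/aws/aes/my-domain/{}"

# One log group per log type; ES_APPLICATION_LOGS holds the error logs.
LOG_GROUPS = {
    "ES_APPLICATION_LOGS": "error-logs",
    "SEARCH_SLOW_LOGS": "search-slow-logs",
    "INDEX_SLOW_LOGS": "index-slow-logs",
}


def log_publishing_options() -> dict:
    """Build LogPublishingOptions with each log type in its own log group."""
    return {
        log_type: {
            "CloudWatchLogsLogGroupArn": LOG_GROUP_ARN.format(suffix),
            "Enabled": True,
        }
        for log_type, suffix in LOG_GROUPS.items()
    }


def slow_log_thresholds(search_warn: str = "5s", index_warn: str = "10s") -> dict:
    """Body for PUT /<index>/_settings; slow logs stay empty until set.

    The default durations are illustrative, not recommendations.
    """
    return {
        "index.search.slowlog.threshold.query.warn": search_warn,
        "index.indexing.slowlog.threshold.index.warn": index_warn,
    }


print(log_publishing_options()["SEARCH_SLOW_LOGS"])
print(slow_log_thresholds())
```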

Enable Monitoring Alerts for Critical Events

In addition to sending ES logs to CloudWatch Logs, the logical next step is configuring monitors and alerts. For example, you can alert when the KMS key used for encryption at rest becomes disabled so that you can quickly re-enable it. Alarms for cluster status, index or storage issues, and CPU or memory pressure are detailed in the Amazon ES documentation.
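For the KMS case, Amazon ES publishes a `KMSKeyError` metric in the `AWS/ES` CloudWatch namespace that reports 1 when the encryption key has been disabled (a related `KMSKeyInaccessible` metric covers deleted keys or revoked grants). As a minimal sketch, the helper below assembles keyword arguments for CloudWatch `PutMetricAlarm` on that metric; the domain name, account ID, and SNS topic ARN are hypothetical placeholders.

```python
def kms_key_alarm(domain_name: str, account_id: str, sns_topic_arn: str,
                  metric_name: str = "KMSKeyError") -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm on an AWS/ES KMS metric."""
    return {
        "AlarmName": f"{domain_name}-{metric_name}",
        "Namespace": "AWS/ES",
        "MetricName": metric_name,
        # Amazon ES metrics are dimensioned by domain name and account ID.
        "Dimensions": [
            {"Name": "DomainName", "Value": domain_name},
            {"Name": "ClientId", "Value": account_id},
        ],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 1,
        # Fire as soon as the metric reports 1 in a single one-minute period.
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [sns_topic_arn],
    }


alarm = kms_key_alarm("my-domain", "111122223333",
                      "arn:aws:sns:us-east-1:111122223333:es-alerts")
print(alarm["AlarmName"])
```

With boto3, this would be used as `boto3.client("cloudwatch").put_metric_alarm(**alarm)`; calling the helper again with `metric_name="KMSKeyInaccessible"` covers the second case.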

Configuration Recommendations

Use Kibana Multi-tenancy for Sensitive Objects

Kibana multi-tenancy is enabled by default. A tenant is a space for saving index patterns, visualizations, dashboards, and other Kibana objects. Multi-tenancy is useful when you need to share sensitive information with some Kibana users without exposing it to all of them. For example, you could create an Executive dashboard with visualizations that only management can access. Out of the box, Kibana comes with only two tenants: Private and Global. By combining Kibana roles in fine-grained access control with Kibana multi-tenancy, you can assign tenant-specific permissions and create dashboards that are shared only with selected users.
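Tenants and tenant permissions can also be managed through the security plugin's REST API. The sketch below only assembles the requests, assuming the Open Distro for Elasticsearch security API paths under `/_opendistro/_security/api` and its `kibana_all_read` action group. The tenant, role, and user names are hypothetical, and a real role would also need index permissions for the data behind the dashboards.

```python
def tenant_requests(tenant: str, role: str, users: list) -> list:
    """Return (method, path, body) tuples for the security plugin REST API.

    Paths assume the Open Distro security plugin; index permissions for the
    data behind the dashboards are intentionally omitted.
    """
    base = "/_opendistro/_security/api"
    return [
        # 1. Create the tenant that will hold the sensitive dashboards.
        ("PUT", f"{base}/tenants/{tenant}", {"description": f"{tenant} dashboards"}),
        # 2. Create a role with read-only access to that tenant.
        ("PUT", f"{base}/roles/{role}", {
            "tenant_permissions": [
                {"tenant_patterns": [tenant], "allowed_actions": ["kibana_all_read"]}
            ]
        }),
        # 3. Map only the selected users to the role.
        ("PUT", f"{base}/rolesmapping/{role}", {"users": users}),
    ]


for method, path, body in tenant_requests("executive", "executive_viewer", ["ceo", "cfo"]):
    print(method, path, body)
```

Each tuple would be sent to the domain endpoint by an authenticated HTTP client; the tenant is created before the role that references it, and the mapping comes last.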

Do Not Put Sensitive Information in URIs

Because Elasticsearch uses the domain and index names in addition to the document types and document IDs in its Uniform Resource Identifiers (URIs), we recommend that you do not put sensitive information into those fields. Doing so could accidentally expose sensitive data to anyone with access to view the logs that your application or server writes to a filesystem.
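One way to keep identifying values out of URIs is to derive document IDs from a keyed hash instead of using, say, an email address directly. The sketch below uses HMAC-SHA256 from Python's standard library. This technique, the `document_path` helper, and the placeholder secret are our own assumptions rather than an Amazon ES feature, and in practice the secret should be stored outside the code.

```python
import hashlib
import hmac


def opaque_doc_id(natural_key: str, secret: bytes) -> str:
    """Derive a stable document ID that does not reveal natural_key."""
    return hmac.new(secret, natural_key.encode("utf-8"), hashlib.sha256).hexdigest()


def document_path(index: str, natural_key: str, secret: bytes) -> str:
    """Build a document URI path; only the keyed hash appears in it."""
    return f"/{index}/_doc/{opaque_doc_id(natural_key, secret)}"


# Placeholder secret for illustration only.
path = document_path("customers", "jane.doe@example.com", b"example-secret")
print(path)
```

Because the hash is keyed, the same record always maps to the same ID for updates, while someone reading access logs cannot recompute the ID from a guessed email address without the secret.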

Avoid Split Brain Scenario

A split brain scenario occurs when an Elasticsearch cluster ends up with more than one elected master node, typically after a network partition, leaving the cluster in an inconsistent state. A split brain ES cluster can lead to data integrity issues, and data integrity is essential for making sound data-driven decisions and building accurate visualizations in Kibana. To avoid a split brain, require a quorum of master-eligible nodes before a master can be elected, using the formula floor(N/2) + 1, where N is the number of master-eligible nodes (not the total number of nodes in the cluster). This quorum-based majority prevents two parts of a partitioned cluster from each electing a master at the same time. For production Amazon ES domains, AWS recommends three dedicated master nodes, which gives a quorum of two.
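The quorum arithmetic is easy to check. In this sketch, `quorum` computes floor(N/2) + 1 for N master-eligible nodes, and the hypothetical `partition_can_elect` helper tells whether one side of a network partition still holds a majority.

```python
def quorum(master_eligible: int) -> int:
    """Minimum number of master-eligible nodes that must agree: floor(N/2) + 1."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


def partition_can_elect(side_size: int, master_eligible: int) -> bool:
    """True if this side of a partition holds enough nodes to elect a master."""
    return side_size >= quorum(master_eligible)


for n in (1, 2, 3, 4, 5):
    print(n, quorum(n))
```

With three master-eligible nodes the quorum is two, so after a 2/1 split only the larger side can elect a master. With two master-eligible nodes the quorum is also two, so any split leaves neither side able to elect one, which is why an odd number of master-eligible nodes is preferred.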

Conclusion

Throughout this article we reviewed and recommended multiple security best practices for Amazon Elasticsearch relating to encryption, logging and monitoring, and general configuration. Thank you for reading. We hope these recommendations are useful and help you deploy and manage an Amazon Elasticsearch cluster securely and with confidence.

Thanks to Dustin Whited, Sarah Gori, and John Porter for their editorial contributions.

Connect with ScaleSec for AWS business

ScaleSec is an APN Consulting Partner guiding AWS customers through security and compliance challenges. ScaleSec’s compliance advisory and implementation services help teams leverage cloud-native services on AWS. We are hiring!
